Results

Note: As of June 2020, we only post new results that exceed the existing state of the art within a given results category.

Please refer to the new technical report for details of the changes.

- Introduction

- LFW Results by Category

- Generating ROC Curves

- Computing Area Under Curve (AUC)

- List of All Methods

Introduction

LFW provides information for supervised learning under two different training paradigms: image-restricted and unrestricted. Under the image-restricted setting, only binary "matched" or "mismatched" labels are given for pairs of images. Under the unrestricted setting, the identity of the person appearing in each image is also available, which allows one to form additional image pairs.

An algorithm designed for LFW can also choose to abstain from using this supervised information, or supplement this with additional, outside training data, which may be labeled (matched/mismatched labeling or identity labeling) or label-free. Depending on these decisions, results on LFW will fall into one of the six categories listed at the top of this page.

The use of training data outside of LFW can have a significant impact on recognition performance. For instance, Wolf et al. [10] showed that using LFW-a, the version of LFW aligned using a trained commercial alignment system, improved the accuracy of the early Nowak and Jurie method [2] from 0.7393 on the funneled images to 0.7912, even though this method was designed to handle some misalignment.

To enable the fair comparison of different algorithms on LFW, we ask that researchers be specific about what type of outside training data was used in the experiments. The list of methods at the bottom of this page will also provide rough details on outside training data used by each method, with label-free outside training data being indicated with a †, and labeled outside training data being indicated with a ‡.

For more information, see the technical report below and the LFW readme.

Gary B. Huang and Erik Learned-Miller.

Labeled Faces in the Wild: Updates and New Reporting Procedures.

UMass Amherst Technical Report UM-CS-2014-003, 5 pages, 2014.

LFW Results by Category

Results in red indicate methods accepted but not yet published (e.g. accepted to an upcoming conference). Results in green indicate commercial recognition systems whose algorithms have not been published and peer-reviewed. We emphasize that researchers should not be compelled to compare against either of these types of results.

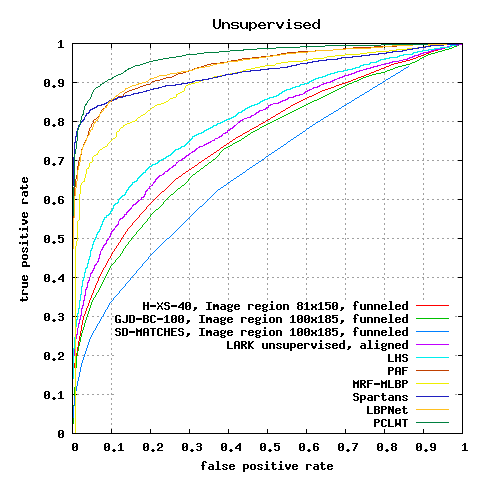

Unsupervised Results

Note: While most categories of results list the mean classification accuracy in the rightmost column, the unsupervised results category gives the Area Under the ROC Curve (AUC). The reason for this is that there is no legitimate way to choose a threshold for the unsupervised results without using labels or label distributions.

| Method | AUC |
| --- | --- |
| SD-MATCHES, 125x125 [12], funneled | 0.5407 |
| H-XS-40, 81x150 [12], funneled | 0.7547 |
| GJD-BC-100, 122x225 [12], funneled | 0.7392 |
| LARK unsupervised [20], aligned | 0.7830 |
| LHS [29], aligned | 0.8107 |
| I-LPQ* [24], aligned | forthcoming |
| Pose Adaptive Filter (PAF) [31] | 0.9405 |
| MRF-MLBP [30] | 0.8994 |
| MRF-Fusion-CSKDA [50] | 0.9894 |
| Spartans [68] | 0.9428 |
| LBPNet [79] | 0.9404 |
| SA-BSIF, WPCA, aligned [85] | 0.9318 |
| PLWT [112] | 0.9608 |
| PCLWT [112] | 0.9688 |
| MS-LZM [116] | 0.9515 |

Figure 1: ROC curves averaged over 10 folds of View 2.

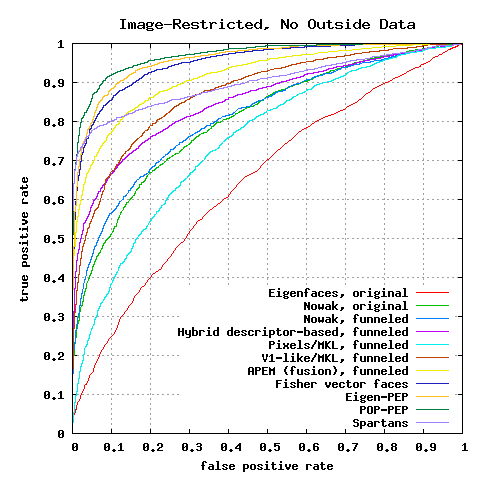

Image-Restricted, No Outside Data Results

| Method | û ± SE |

| Eigenfaces1, original | 0.6002 ± 0.0079 |

| Nowak2, original | 0.7245 ± 0.0040 |

| Nowak2, funneled3 | 0.7393 ± 0.0049 |

| Hybrid descriptor-based5, funneled | 0.7847 ± 0.0051 |

| 3x3 Multi-Region Histograms (1024)6 | 0.7295 ± 0.0055 |

| Pixels/MKL, funneled7 | 0.6822 ± 0.0041 |

| V1-like/MKL, funneled7 | 0.7935 ± 0.0055 |

| APEM (fusion), funneled25 | 0.8408 ± 0.0120 |

| MRF-MLBP30 | 0.7908 ± 0.0014 |

| Fisher vector faces32 | 0.8747 ± 0.0149 |

| Eigen-PEP49 | 0.8897 ± 0.0132 |

| MRF-Fusion-CSKDA50 | 0.9589 ± 0.0194 |

| POP-PEP58 | 0.9110 ± 0.0147 |

| Spartans68 | 0.8755 ± 0.0021 |

| RSF86 | 0.8881 ± 0.0078 |

Figure 2: ROC curves averaged over 10 folds of View 2.
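The û ± SE column in the accuracy tables can be reproduced from per-fold accuracies. The following is a minimal sketch, assuming the usual convention that û is the mean accuracy over the 10 folds of View 2 and SE is the sample standard deviation divided by the square root of the number of folds. The function name and the fold values are hypothetical and for illustration only.

```python
import math


def mean_and_standard_error(fold_accuracies):
    """Return (mean, standard error of the mean) for per-fold accuracies."""
    n = len(fold_accuracies)
    if n < 2:
        raise ValueError("need at least two folds to estimate a standard error")
    mean = sum(fold_accuracies) / n
    # Unbiased sample variance: divide by n - 1, not n.
    variance = sum((p - mean) ** 2 for p in fold_accuracies) / (n - 1)
    return mean, math.sqrt(variance) / math.sqrt(n)


# Hypothetical accuracies for the 10 folds of View 2 (not real results).
folds = [0.73, 0.75, 0.74, 0.72, 0.76, 0.74, 0.73, 0.75, 0.74, 0.74]
mean, se = mean_and_standard_error(folds)
print(f"{mean:.4f} ± {se:.4f}")
```

Reporting the standard error alongside the mean makes it easier to judge whether two entries in a table differ by more than fold-to-fold variation.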

Unrestricted, No Outside Data Results

Currently no results in this category.

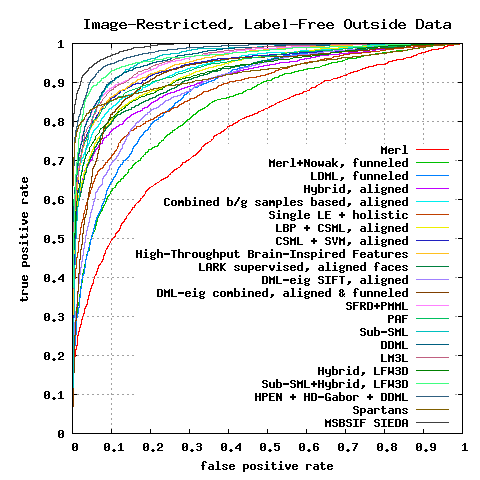

Image-Restricted, Label-Free Outside Data Results

| Method | û ± SE |

| MERL4 | 0.7052 ± 0.0060 |

| MERL+Nowak4, funneled | 0.7618 ± 0.0058 |

| LDML, funneled8 | 0.7927 ± 0.0060 |

| Hybrid, aligned9 | 0.8398 ± 0.0035 |

| Combined b/g samples based methods, aligned10 | 0.8683 ± 0.0034 |

| NReLU13 | 0.8073 ± 0.0134 |

| Single LE + holistic14 | 0.8122 ± 0.0053 |

| LBP + CSML, aligned15 | 0.8557 ± 0.0052 |

| CSML + SVM, aligned15 | 0.8800 ± 0.0037 |

| High-Throughput Brain-Inspired Features, aligned16 | 0.8813 ± 0.0058 |

| LARK supervised20, aligned | 0.8510 ± 0.0059 |

| DML-eig SIFT21, funneled | 0.8127 ± 0.0230 |

| DML-eig combined21, funneled & aligned | 0.8565 ± 0.0056 |

| Convolutional DBN37 | 0.8777 ± 0.0062 |

| SFRD+PMML28 | 0.8935 ± 0.0050 |

| Pose Adaptive Filter (PAF)31 | 0.8777 ± 0.0051 |

| Sub-SML35 | 0.8973 ± 0.0038 |

| VMRS36 | 0.9110 ± 0.0059 |

| DDML43 | 0.9068 ± 0.0141 |

| LM3L51 | 0.8957 ± 0.0153 |

| Hybrid on LFW3D52 | 0.8563 ± 0.0053 |

| Sub-SML + Hybrid on LFW3D52 | 0.9165 ± 0.0104 |

| HPEN + HD-LBP + DDML61 | 0.9257 ± 0.0036 |

| HPEN + HD-Gabor + DDML61 | 0.9280 ± 0.0047 |

| Spartans68 | 0.8969 ± 0.0036 |

| MSBSIF-SIEDA69 | 0.9463 ± 0.0095 |

| TSML with OCLBP73 | 0.8710 ± 0.0043 |

| TSML with feature fusion73 | 0.8980 ± 0.0047 |

Figure 4: ROC curves averaged over 10 folds of View 2.

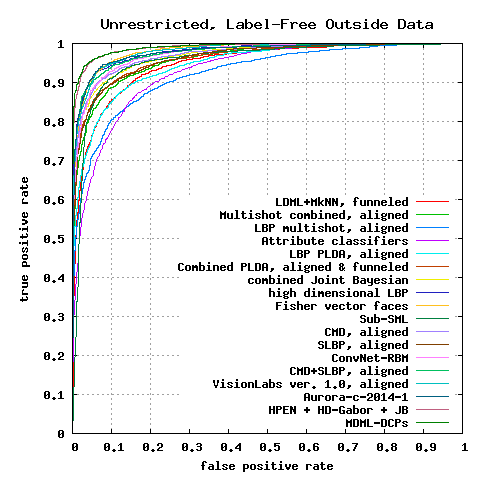

Unrestricted, Label-Free Outside Data Results

| Method | û ± SE |

| LDML-MkNN, funneled8 | 0.8750 ± 0.0040 |

| Combined multishot, aligned9 | 0.8950 ± 0.0051 |

| LBP multishot, aligned9 | 0.8517 ± 0.0061 |

| Attribute classifiers11 | 0.8525 ± 0.0060 |

| LBP PLDA, aligned17 | 0.8733 ± 0.0055 |

| combined PLDA, funneled & aligned17 | 0.9007 ± 0.0051 |

| combined Joint Bayesian26 | 0.9090 ± 0.0148 |

| high-dim LBP27 | 0.9318 ± 0.0107 |

| Fisher vector faces32 | 0.9303 ± 0.0105 |

| Sub-SML35 | 0.9075 ± 0.0064 |

| VMRS36 | 0.9205 ± 0.0045 |

| ConvNet-RBM42 | 0.9175 ± 0.0048 |

| CMD, aligned22 | 0.9170 ± 0.0110 |

| SLBP, aligned22 | 0.9000 ± 0.0133 |

| CMD+SLBP, aligned22 | 0.9258 ± 0.0136 |

| VisionLabs ver. 1.0, aligned38 | 0.9290 ± 0.0031 |

| Aurora, aligned39 | 0.9324 ± 0.0044 |

| MLBPH+MLPQH+MBSIFH54 | 0.9303 ± 0.0082 |

| HPEN + HD-LBP + JB61 | 0.9487 ± 0.0038 |

| HPEN + HD-Gabor + JB61 | 0.9525 ± 0.0036 |

| MDML-DCPs66 | 0.9558 ± 0.0034 |

Figure 5: ROC curves averaged over 10 folds of View 2.

Unrestricted, Labeled Outside Data Results

| Method | û ± SE |

| Simile classifiers11 | 0.8472 ± 0.0041 |

| Attribute and Simile classifiers11 | 0.8554 ± 0.0035 |

| Multiple LE + comp14 | 0.8445 ± 0.0046 |

| Associate-Predict18 | 0.9057 ± 0.0056 |

| Tom-vs-Pete23 | 0.9310 ± 0.0135 |

| Tom-vs-Pete + Attribute23 | 0.9330 ± 0.0128 |

| combined Joint Bayesian26 | 0.9242 ± 0.0108 |

| high-dim LBP27 | 0.9517 ± 0.0113 |

| DFD33 | 0.8402 ± 0.0044 |

| TL Joint Bayesian34 | 0.9633 ± 0.0108 |

| face.com r2011b19 | 0.9130 ± 0.0030 |

| Face++40 | 0.9950 ± 0.0036 |

| DeepFace-ensemble41 | 0.9735 ± 0.0025 |

| ConvNet-RBM42 | 0.9252 ± 0.0038 |

| POOF-gradhist44 | 0.9313 ± 0.0040 |

| POOF-HOG44 | 0.9280 ± 0.0047 |

| FR+FCN45 | 0.9645 ± 0.0025 |

| DeepID46 | 0.9745 ± 0.0026 |

| GaussianFace47 | 0.9852 ± 0.0066 |

| DeepID248 | 0.9915 ± 0.0013 |

| TCIT53 | 0.9333 ± 0.0124 |

| DeepID2+55 | 0.9947 ± 0.0012 |

| betaface.com56 | 0.9953 ± 0.0009 |

| DeepID357 | 0.9953 ± 0.0010 |

| insky.so59 | 0.9551 ± 0.0013 |

| Uni-Ubi60 | 0.9900 ± 0.0032 |

| FaceNet62 | 0.9963 ± 0.0009 |

| Baidu64 | 0.9977 ± 0.0006 |

| AuthenMetric65 | 0.9977 ± 0.0009 |

| MMDFR67 | 0.9902 ± 0.0019 |

| CW-DNA-170 | 0.9950 ± 0.0022 |

| Faceall71 | 0.9967 ± 0.0007 |

| JustMeTalk72 | 0.9887 ± 0.0016 |

| Facevisa74 | 0.9955 ± 0.0014 |

| pose+shape+expression augmentation75 | 0.9807 ± 0.0060 |

| ColorReco76 | 0.9940 ± 0.0022 |

| Asaphus77 | 0.9815 ± 0.0039 |

| Daream78 | 0.9968 ± 0.0009 |

| Dahua-FaceImage80 | 0.9978 ± 0.0007 |

| Skytop Gaia82 | 0.9630 ± 0.0023 |

| CNN-3DMM estimation83 | 0.9235 ± 0.0129 |

| Samtech Facequest84 | 0.9971 ± 0.0018 |

| XYZ Robot87 | 0.9895 ± 0.0020 |

| THU CV-AI Lab88 | 0.9973 ± 0.0008 |

| dlib90 | 0.9938 ± 0.0027 |

| Aureus91 | 0.9920 ± 0.0030 |

| YouTu Lab, Tencent63 | 0.9980 ± 0.0023 |

| Orion Star92 | 0.9965 ± 0.0032 |

| Yuntu WiseSight93 | 0.9943 ± 0.0045 |

| PingAn AI Lab89 | 0.9980 ± 0.0016 |

| Turing12394 | 0.9940 ± 0.0040 |

| Hisign95 | 0.9968 ± 0.0030 |

| VisionLabs V2.038 | 0.9978 ± 0.0007 |

| Deepmark96 | 0.9923 ± 0.0016 |

| Force Infosystems97 | 0.9973 ± 0.0028 |

| ReadSense98 | 0.9982 ± 0.0007 |

| CM-CV&AR99 | 0.9983 ± 0.0024 |

| sensingtech100 | 0.9970 ± 0.0008 |

| Glasssix101 | 0.9983 ± 0.0018 |

| icarevision102 | 0.9977 ± 0.0030 |

| Easen Electron81 | 0.9983 ± 0.0006 |

| yunshitu103 | 0.9987 ± 0.0012 |

| RemarkFace104 | 0.9972 ± 0.0020 |

| IntelliVision105 | 0.9973 ± 0.0027 |

| senscape106 | 0.9930 ± 0.0053 |

| Meiya Pico107 | 0.9972 ± 0.0008 |

| Faceter.io108 | 0.9978 ± 0.0008 |

| Pegatron109 | 0.9958 ± 0.0013 |

| CHTFace110 | 0.9960 ± 0.0025 |

| FRDC111 | 0.9972 ± 0.0029 |

| YI+AI113 | 0.9983 ± 0.0024 |

| Aratek114 | 0.9972 ± 0.0021 |

| Cylltech115 | 0.9982 ± 0.0023 |

| TerminAI117 | 0.9980 ± 0.0016 |

| ever.ai118 | 0.9985 ± 0.0020 |

| Camvi119 | 0.9987 ± 0.0018 |

| IFLYTEK-CV120 | 0.9980 ± 0.0024 |

| DRD, CTBC Bank121 | 0.9981 ± 0.0033 |

| Innovative Technology122 | 0.9988 ± 0.0004 |

| Oz Forensics123 | 0.9987 ± 0.0018 |

Figure 6: ROC curves averaged over 10 folds of View 2.


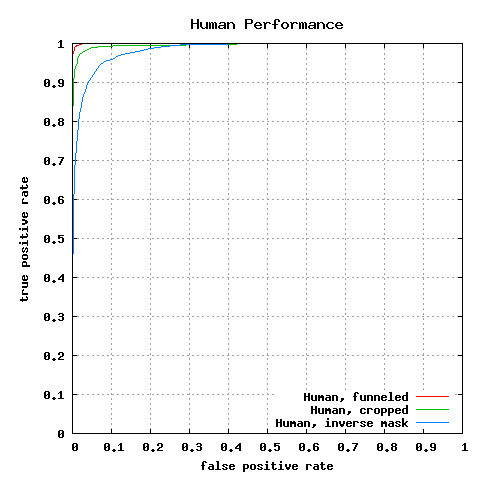

Human performance, measured through Amazon Mechanical Turk

See note on human performance below.

| Human, funneled11 | 0.9920 |

| Human, cropped11 | 0.9753 |

| Human, inverse mask11 | 0.9427 |

Figure 7: ROC curves averaged over 10 folds of View 2.

Note on human performance:

The experiments on human performance, as described in [11], did not control for whether subjects had prior exposure to the people pictured in the test sets. The human subjects were probably familiar with the appearance of many of the identities in the test sets, since many of them are famous politicians, sports figures, and actors. This is tantamount to the human subjects having labeled training data for some subjects in the test sets, which is disallowed under all LFW protocols. That is, the human performance experiments do not conform to any of the protocols described in the update to the LFW technical report.

While we believe that these results are interesting and worth reporting as a separate category, they may somewhat overestimate the accuracy of humans on this task when the identities of test images are not people whose appearance is already known.

Generating ROC Curves

The following scripts were used to generate the above ROC curves using gnuplot:

plot_lfw_roc_unsupervised.p

plot_lfw_roc_restricted_strict.p

plot_lfw_roc_restricted_labelfree.p

plot_lfw_roc_unrestricted_labelfree.p

plot_lfw_roc_unstricted_labeled.p

plot_lfw_roc_human.p

The scripts take in one text file for each method, containing on each line a point on the ROC curve, i.e. average true positive rate, followed by average false positive rate, separated by a single space. Additional methods can be added to the script by adding on to the plot command, e.g.

plot "nowak-original-roc.txt" using 2:1 with lines title "Nowak, original", \
     "nowak-funneled-roc.txt" using 2:1 with lines title "Nowak, funneled", \
     "new-method-roc.txt" using 2:1 with lines title "New Method"

Note that each point on the curve represents the average over the 10 folds of (false positive rate, true positive rate) for a fixed threshold.

Existing ROC files can be downloaded here:

Unsupervised

- H-XS-40 - lbp_eurasip2009_jrs-roc.txt

- GJD-BC-100 - gabor_eurasip2009_jrs-roc.txt

- SD-MATCHES - sift_eurasip2009_jrs-roc.txt

- LARK - Haejong_Milanfar_LARK_unsupervised.txt

- LHS - lhs_roc_view2.txt

- PAF - paf_cvpr2013.txt

- MRF-MLBP - mrf_mlbp_roc.txt

- Spartans - Spartans_Unsupervised.txt

- LBPNet - lbpnet_roc_lfw_unsu.txt

- PLWT - PLWT.txt

- PCLWT - PCLWT.txt

Image-Restricted, No Outside Data

- Eigenfaces - eigenfaces-original-roc.txt

- Nowak, original - nowak-original-roc.txt

- Nowak, funneled - nowak-funneled-roc.txt

- Hybrid descriptor-based - combined16.txt

- Pixels/MKL - funneled-v1-like-roc.txt

- V1-like/MKL - funneled-v1-like-roc.txt

- APEM (fusion) - apem-funnel-roc.txt

- Fisher vector faces - fisher-vector-faces-restricted.txt

- Eigen-PEP - ROC-LFW-EigenPEP.txt

- POP-PEP - POP-PEP.txt

- Spartans - Spartans_Image_Restricted_No_Outside_Data.txt

Image-Restricted, Label-Free Outside Data

- MERL - merl-roc.txt

- MERL+Nowak - combined-roc.txt

- LDML - guillaumin-ldml.txt

- Hybrid - hybrid_with_sift_aligned.txt

- Combined b/g samples based methods - accv09-wolf-hassner-taigman-roc.txt

- Single LE + holistic - LE_bestsingle.txt

- LBP + CSML - aligned_lbp_sqrt_csml_roc.txt

- CSML + SVM - aligned_csml_svm_roc.txt

- High-Throughput Brain-Inspired Features - pinto-cox-fg2011-roc.txt

- LARK supervised - Haejong_Milanfar_LARK_supervised.txt

- DML-eig SIFT - dml_eig_SIFT_restricted_jmlr.txt

- DML-eig combined - dml_eig_combined_restricted_jmlr.txt

- SFRD+PMML - LFW_ROC_SFRD+PMML.txt

- Pose Adaptive Filter (PAF) - paf_cvpr2013.txt

- Sub-SML - sub-sml_iccv2013_combined_restricted.txt

- DDML - ddml_combined_restricted.txt

- LM3L - lm3l_restricted_lfw.txt

- Hybrid on LFW3D - Hybrid_on_LFW3D.txt

- Sub-SML + Hybrid on LFW3D - Sub-SML_and_Hybrid_on_LFW3D.txt

- HPEN + HD-LBP + DDML - score_lfw_restricted_HPEN_HDLBP_DDML.txt

- HPEN + HD-Gabor + DDML - score_lfw_restricted_HPEN_HDGabor_DDML.txt

- Spartans - Spartans_Image_Restricted_Label_Free_Outside_Data.txt

- MSBSIF SIEDA - MSBSIF_SIEDA.txt

Unrestricted, Label-Free Outside Data

- LDML-MkNN - guillaumin-ldmlmknn.txt

- Combined multishot - multishot_unrestricted_bmvc09.txt

- LBP multishot - multishot_unrestricted_single_feature_bmvc09.txt

- Attribute classifiers - kumar_attrs_pami2011.txt

- LBP PLDA - plda_lbp_unrestricted_pami.txt

- combined PLDA - plda_combined_unrestricted_pami.txt

- combined Joint Bayesian - joint_bayesian_combined_unrestricted_eccv12.txt

- high-dim LBP - high_dim_LBP_unrestricted_cvpr13.txt

- Fisher vector faces - fisher-vector-faces-unrestricted.txt

- Sub-SML - sub-sml_iccv2013_combined_unrestricted.txt

- CMD - ROC_CovarianceMatrix.txt

- SLBP - ROC_SLBP.txt

- ConvNet-RBM - convnet-rbm_unrestrict_iccv13.txt

- CMD - ROC_Combined.txt

- VisionLabs ver. 1.0 - visionlabs-unrestricted.txt

- Aurora - Aurora-c-2014-1--facerec.com.txt

- HPEN + HD-LBP + JB - score_lfw_unrestricted_HPEN_HDLBP_JB.txt

- HPEN + HD-Gabor + JB - score_lfw_unrestricted_HPEN_HDGabor_JB.txt

- MDML-DCPs - MDMLDCPs_ROC.txt

Unrestricted, Labeled Outside Data

- Simile classifiers - kumar_similes_pami2011.txt

- Attribute and Simile classifiers - kumar_attrs_sims_pami2011.txt

- Multiple LE + comp - LE_combine.txt

- Associate-Predict - associatepredict_cvpr11.txt

- Tom-vs-Pete - berg_belhumeur_bmvc12.txt

- Tom-vs-Pete + Attribute - berg_belhumeur_attrs_bmvc12.txt

- combined Joint Bayesian - joint_bayesian_combined_restricted_outside_eccv12.txt

- high-dim LBP - high_dim_LBP_restricted_outside_cvpr13.txt

- DFD - DFD_unsupervised.txt

- TL Joint Bayesian - XudongCao_TransferLearning_FaceVerification_ICCV2013.txt

- face.com r2011b - taigman_wolf_r2011b.txt

- Face++ - faceplusplus_lfw_result.txt

- DeepFace - deepface_ensemble.txt

- ConvNet-RBM - convnet-rbm_celebfaces_iccv13.txt

- POOF-gradhist - berg_belhumeur_poofs_cvpr13_gradhist.txt

- POOF-HOG - berg_belhumeur_poofs_cvpr13_hog.txt

- FR+FCN - canonical_cnn_lholistic_outside.txt

- DeepID - YiSun_DeepID_CelebFaces+_TL_CVPR2014.txt

- GaussianFace - gaussianface.txt

- DeepID2 - YiSun_DeepID2.txt

- TCIT - tcit_roc.txt

- DeepID2+ - deepid2plus_arxiv1412.1265.txt

- betaface.com - ROC2017_Betaface_SDK32_greyscale_noalign_DeepID3_512.txt

- DeepID3 - DeepID3_arXiv_1502.00873.txt

- insky.so - insky-roc.txt

- Uni-Ubi - roc_uni-ubi.txt

- Baidu - BaiduIDLFinal.TPFP

- AuthenMetric - authenmetric_lfw_roc_07-28-2015.txt

- MM-DFR - MMDFR_ROC.txt

- CW-DNA-1 - CW-DNA-1.txt

- Faceall - FaceALL_V2_LFW_ROC.txt

- JustMeTalk - justmetalk_roc_lfw.txt

- Facevisa - facevisa2.txt

- pose+shape+expression augmentation - augmented_CNN_lfw.txt

- ColorReco - roc_colorreco.txt

- Daream - daream_lfw_roc.txt

- Dahua-FaceImage - dahua-faceimage_lfw_roc.txt

- Easen Electron - EasenElectron3_lfw_ROC.txt

- Skytop Gaia - gskytop_lfw_roc.txt

- CNN-3DMM estimation - 3DMM_CNN_lfw.txt

- samtech facequest - samtech_facequest_testROC_avg.txt

- XYZ Robot - XYZ-Robot_LFW_ROC.txt

- THU CV-AI Lab - thu_roc.txt

- PingAn AI Lab - PingAn_ai_tpr_fpr.txt

- dlib - dlib_LFW_roc_curve.txt

- Aureus - Aureus5.6_ROC.txt

- YouTu Lab, Tencent - tencent-bestimage-roc.txt

- Yuntu WiseSight - Yuntu_Tech_ROC.txt

- Hisign Technology - hisign_lfw_roc.txt

- VisionLabs V2.0 - VL_LFW_ROC.txt

- Deepmark - deepmark_roc.txt

- Force Infosystems - forceinfosystem_ROC_avg.txt

- ReadSense - readsense-v1-roc.txt

- CM-CV&AR - CV_AR_lfw_roc.txt

- sensingtech - sensingtech_lfw_roc.txt

- Glasssix - Glasssix_roc_result.txt

- icarevision - icarevision_roc.txt

- yunshitu - yunshitu_face_r100_v8_lfw_roc.txt

- IntelliVision - intellivision_roc.txt

- senscape - senscape_roc.txt

- Meiya Pico - MeiyaPico_roc_result.txt

- Faceter.io - lfw_tpfp_faceter.txt

- Pegatron - pegatron_20171121_lfw_roc.txt

- CHTFace - chttl_roc_curve_0.9960.txt

- FRDC - FRDC_ROC.txt

- YI+AI - yi_ai_lfw_roc.txt

- Aratek - aratek_faceimage_lfw_roc.txt

- Cylltech - Cylltech_LFW_ROC.txt

- TerminAI - TerminAI_roc.txt

- ever.ai - everai_lfw_roc.txt

- DRD, CTBC Bank - CTBC_DRD_lfw_roc.txt

- Innovative Technology - ITL_FaceIT2_roc.txt

- Oz Forensics - Oz_Forensics_roc.txt

Human Performance (Amazon Mechanical Turk)

- original - kumar_human_orig.txt

- cropped - kumar_human_crop.txt

- inverse mask - kumar_human_inv.txt

Notes: gnuplot is multi-platform and freely distributed, and can be downloaded here. plot_lfw_roc_unsupervised.p can either be run as a shell script on Unix/Linux machines (e.g. chmod u+x plot_lfw_roc_unsupervised.p; ./plot_lfw_roc_unsupervised.p) or loaded through gnuplot (e.g. at the gnuplot command line gnuplot> load "plot_lfw_roc_unsupervised.p").
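The ROC text files described in this section hold one point per line: average true positive rate, then average false positive rate, separated by a single space, with each point averaged over the folds at a fixed threshold. The sketch below shows one way such points could be produced from per-fold similarity scores. All function names, the score convention (higher means "more likely matched"), and the sample data are assumptions made for illustration; this is not the procedure used by any particular method listed here.

```python
def average_roc(folds, thresholds):
    """Average (TPR, FPR) over folds at each fixed threshold.

    folds: list of (scores, labels) pairs, one per fold; labels are 1 for a
    matched pair and 0 for a mismatched pair. A pair is predicted "matched"
    when its score is >= the threshold (an assumed convention).
    Returns a list of (mean_tpr, mean_fpr), one per threshold.
    """
    points = []
    for t in thresholds:
        tprs, fprs = [], []
        for scores, labels in folds:
            pos = sum(labels)
            neg = len(labels) - pos
            tp = sum(1 for s, y in zip(scores, labels) if y == 1 and s >= t)
            fp = sum(1 for s, y in zip(scores, labels) if y == 0 and s >= t)
            tprs.append(tp / pos)
            fprs.append(fp / neg)
        points.append((sum(tprs) / len(tprs), sum(fprs) / len(fprs)))
    return points


def format_roc_lines(points):
    """Format points as 'TPR FPR' lines, matching gnuplot's `using 2:1`."""
    return [f"{tpr:.6f} {fpr:.6f}" for tpr, fpr in points]


def write_roc_file(path, points):
    """Write one ROC point per line to a text file."""
    with open(path, "w") as f:
        f.write("\n".join(format_roc_lines(points)) + "\n")


# Two tiny hypothetical folds (real LFW folds have 600 pairs each).
example_folds = [
    ([0.9, 0.8, 0.3, 0.1], [1, 1, 0, 0]),
    ([0.7, 0.4, 0.6, 0.2], [1, 1, 0, 0]),
]
for line in format_roc_lines(average_roc(example_folds, [0.25, 0.5, 0.75])):
    print(line)
```

Calling `write_roc_file` with a hypothetical name such as `"new-method-roc.txt"` would yield a file that can be added to the plot command shown earlier in this section.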

Computing Area Under Curve (AUC)

The following MATLAB function computes the area under the ROC curve (AUC) for unsupervised methods, as explained in the update to the LFW technical report:

lfw_auc.m
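For readers without MATLAB, the area under an ROC curve can be approximated with the trapezoidal rule. The sketch below is not a port of lfw_auc.m and may differ from it in details; it assumes the ROC file format described above (true positive rate, then false positive rate, on each line) and pins the curve to the corners (0, 0) and (1, 1). The function names are hypothetical.

```python
def load_roc_points(path):
    """Read (tpr, fpr) pairs from a whitespace-separated ROC text file."""
    points = []
    with open(path) as f:
        for line in f:
            parts = line.split()
            if len(parts) >= 2:
                points.append((float(parts[0]), float(parts[1])))
    return points


def roc_auc(points):
    """Trapezoidal area under the ROC curve.

    points: iterable of (tpr, fpr) pairs, in the ROC file's column order.
    """
    # Re-order as (fpr, tpr), add the curve's end points, and sort by FPR.
    curve = sorted({(fpr, tpr) for tpr, fpr in points} | {(0.0, 0.0), (1.0, 1.0)})
    area = 0.0
    for (x0, y0), (x1, y1) in zip(curve, curve[1:]):
        area += (x1 - x0) * (y0 + y1) / 2.0
    return area


# A chance-level curve passes through (0.5, 0.5) and has area one half.
print(roc_auc([(0.5, 0.5)]))
```

Because the area is computed over the whole curve, no decision threshold has to be chosen, which is why AUC suits the unsupervised category.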

Methods

- Matthew A. Turk and Alex P. Pentland.

Face Recognition Using Eigenfaces.

Computer Vision and Pattern Recognition (CVPR), 1991.

[pdf]

- Eric Nowak and Frederic Jurie.

Learning visual similarity measures for comparing never seen objects.

Computer Vision and Pattern Recognition (CVPR), 2007.

[pdf]

[webpage]

Results were obtained using the binary available from the paper's webpage. View 1 of the database was used to compute the cut-off threshold used in computing mean classification accuracy on View 2. For each of the 10 folds of View 2 of the database, 9 of the sets were used as training, the similarity measures were computed for the held out test set, and the threshold value was used to classify pairs as matched or mismatched. This procedure was performed both on the original images as well as the set of aligned images from the funneled parallel database.

We used the same parameters given on the paper's webpage, with C=1 for the SVM, specifically:

pRazSimiERCF -verbose 2 -ntrees 5 -maxleavesnb 25000 -nppL 100000 -ncondtrial 1000 -nppT 1000 -wmin 15 -wmax 100 -neirelsize 1 -svmc 1

- Gary B. Huang, Vidit Jain, and Erik Learned-Miller.

Unsupervised joint alignment of complex images.

International Conference on Computer Vision (ICCV), 2007.

[pdf]

[webpage]

Face images were aligned using publicly available source code from project webpage.

- Gary B. Huang, Michael J. Jones, and Erik Learned-Miller.

LFW Results Using a Combined Nowak Plus MERL Recognizer.

Faces in Real-Life Images Workshop in European Conference on Computer Vision (ECCV), 2008.

[pdf]

[commercial system, see note at top]

†Face images were aligned using a commercial system that attempts to identify nine facial landmark points through Viola-Jones type landmark detectors.

- Lior Wolf, Tal Hassner, and Yaniv Taigman.

Descriptor Based Methods in the Wild.

Faces in Real-Life Images Workshop in European Conference on Computer Vision (ECCV), 2008.

[pdf]

[webpage]

- Conrad Sanderson and Brian C. Lovell.

Multi-Region Probabilistic Histograms for Robust and Scalable Identity Inference.

International Conference on Biometrics (ICB), 2009.

[pdf]

- Nicolas Pinto, James J. DiCarlo, and David D. Cox.

How far can you get with a modern face recognition test set using only simple features?

Computer Vision and Pattern Recognition (CVPR), 2009.

[pdf]

- Matthieu Guillaumin, Jakob Verbeek, and Cordelia Schmid.

Is that you? Metric Learning Approaches for Face Identification.

International Conference on Computer Vision (ICCV), 2009.

[pdf]

[webpage]

†SIFT features were extracted at nine facial feature points using the detector of Everingham, Sivic, and Zisserman, 'Hello! My name is... Buffy' - automatic naming of characters in TV video, BMVC, 2006.

- Yaniv Taigman, Lior Wolf, and Tal Hassner.

Multiple One-Shots for Utilizing Class Label Information.

British Machine Vision Conference (BMVC), 2009.

[pdf]

[webpage]

†Used LFW-a, a version of LFW aligned using a commercial, fiducial-points based alignment system.

- Lior Wolf, Tal Hassner, and Yaniv Taigman.

Similarity Scores based on Background Samples.

Asian Conference on Computer Vision (ACCV), 2009.

[pdf]

†Used LFW-a.

- Neeraj Kumar, Alexander C. Berg, Peter N. Belhumeur, and Shree K. Nayar.

Attribute and Simile Classifiers for Face Verification.

International Conference on Computer Vision (ICCV), 2009.

[pdf]

[webpage]

Neeraj Kumar, Alexander C. Berg, Peter N. Belhumeur, and Shree K. Nayar.

Describable Visual Attributes for Face Verification and Image Search.

IEEE Transactions on Pattern Analysis and Machine Intelligence (PAMI), October 2011.

[pdf]

[webpage]

†A commercial face detector - Omron, OKAO vision - was used to detect fiducial point locations. These locations were used to align the images and extract features from particular face regions.

‡Attribute classifiers (e.g. Brown Hair) were trained using outside data and Amazon Mechanical Turk labelings, and simile classifiers (e.g. mouth similar to Angelina Jolie) were trained using images from PubFig. The outputs of these classifiers on LFW images were used as features in the recognition system.

The computed attributes for all images in LFW can be obtained in this file: lfw_attributes.txt. The file format and meaning are described on this page, and further information on the attributes can be found on the project website.

- Javier Ruiz-del-Solar, Rodrigo Verschae, and Mauricio Correa.

Recognition of Faces in Unconstrained Environments: A Comparative Study.

EURASIP Journal on Advances in Signal Processing (Recent Advances in Biometric Systems: A Signal Processing Perspective), Vol. 2009, Article ID 184617, 19 pages.

[pdf]

- Vinod Nair and Geoffrey E. Hinton.

Rectified Linear Units Improve Restricted Boltzmann Machines.

International Conference on Machine Learning (ICML), 2010.

[pdf]

†Used Machine Perception Toolbox from MPLab, UCSD to detect eye location, manually corrected eye coordinates for worst ~2000 detections (and therefore not conforming to strict LFW protocol), used coordinates to rotate and scale images.

†Used face data outside of LFW for unsupervised feature learning.

- Zhimin Cao, Qi Yin, Xiaoou Tang, and Jian Sun.

Face Recognition with Learning-based Descriptor.

Computer Vision and Pattern Recognition (CVPR), 2010.

[pdf]

†Landmarks are detected using the fiducial point detector of Liang, Xiao, Wen, Sun, Face Alignment via Component-based Discriminative Search, ECCV, 2008, which are then used to extract face component images for feature computation.

‡The "+ comp" method uses a pose-adaptive approach, where LFW images are labeled as being frontal, left facing, or right facing, using three images selected from the Multi-PIE data set.

- Hieu V. Nguyen and Li Bai.

Cosine Similarity Metric Learning for Face Verification.

Asian Conference on Computer Vision (ACCV), 2010.

[pdf]

†Used LFW-a.

- Nicolas Pinto and David Cox.

Beyond Simple Features: A Large-Scale Feature Search Approach to Unconstrained Face Recognition.

International Conference on Automatic Face and Gesture Recognition (FG), 2011.

[pdf]

†Used LFW-a.

- Peng Li, Yun Fu, Umar Mohammed, James H. Elder, and Simon J.D. Prince.

Probabilistic Models for Inference About Identity.

IEEE Transactions on Pattern Analysis and Machine Intelligence (PAMI), vol. 34, no. 1, pp. 144-157, Jan. 2012.

[pdf]

[webpage]

†Used LFW-a.

- Qi Yin, Xiaoou Tang, and Jian Sun.

An Associate-Predict Model for Face Recognition.

Computer Vision and Pattern Recognition (CVPR), 2011.

[pdf]

†Four landmarks are detected using a standard facial point detector and used to determine twelve facial components.

‡The recognition system makes use of 200 identities from the Multi-PIE data set, covering 7 poses and 4 illumination conditions for each identity.

- Yaniv Taigman and Lior Wolf.

Leveraging Billions of Faces to Overcome Performance Barriers in Unconstrained Face Recognition.

ArXiv e-prints, 2011.

[pdf]

[commercial system, see note at top]

† ‡A commercial recognition system, making use of outside training data, is tested on LFW.

- Hae Jong Seo and Peyman Milanfar.

Face Verification Using the LARK Representation.

IEEE Transactions on Information Forensics and Security, 2011.

[pdf]

†Used LFW-a.

- Yiming Ying and Peng Li.

Distance Metric Learning with Eigenvalue Optimization.

Journal of Machine Learning Research (Special Topics on Kernel and Metric Learning), 2012.

[pdf]

†Used LFW-a, and features extracted from facial feature points of Guillaumin et al., 2009.

- Chang Huang, Shenghuo Zhu, and Kai Yu.

Large Scale Strongly Supervised Ensemble Metric Learning, with Applications to Face Verification and Retrieval.

NEC Technical Report TR115, 2011.

[pdf]

[arxiv]

†Used LFW-a.

- Thomas Berg and Peter N. Belhumeur.

Tom-vs-Pete Classifiers and Identity-Preserving Alignment for Face Verification.

British Machine Vision Conference (BMVC), 2012.

[pdf]

† ‡Outside training data is used in alignment and recognition systems.

- Sibt ul Hussain, Thibault Napoléon, and Fréderic Jurie.

Face Recognition Using Local Quantized Patterns.

British Machine Vision Conference (BMVC), 2012.

[pdf]

[webpage/code]

†Used LFW-a.

- Haoxiang Li, Gang Hua, Zhe Lin, Jonathan Brandt, and Jianchao Yang.

Probabilistic Elastic Matching for Pose Variant Face Verification.

Computer Vision and Pattern Recognition (CVPR), 2013.

[pdf]

- Dong Chen, Xudong Cao, Liwei Wang, Fang Wen, and Jian Sun.

Bayesian Face Revisited: A Joint Formulation.

European Conference on Computer Vision (ECCV), 2012.

[pdf]

†Used landmark detector for image alignment.

‡Used outside training data in recognition system (for one set of results).

- Dong Chen, Xudong Cao, Fang Wen, and Jian Sun.

Blessing of Dimensionality: High-dimensional Feature and Its Efficient Compression for Face Verification.

Computer Vision and Pattern Recognition (CVPR), 2013.

[pdf]

†Extracted features at landmarks detected using Cao et al., Face Alignment by Explicit Shape Regression, CVPR 2012.

‡Used outside training data in recognition system (for one set of results).

- Zhen Cui, Wen Li, Dong Xu, Shiguang Shan, and Xilin Chen.

Fusing Robust Face Region Descriptors via Multiple Metric Learning for Face Recognition in the Wild.

Computer Vision and Pattern Recognition (CVPR), 2013.

[pdf]

†Used commercial face alignment software.

- Gaurav Sharma, Sibt ul Hussain, Fréderic Jurie.

Local Higher-Order Statistics (LHS) for Texture Categorization and Facial Analysis.

European Conference on Computer Vision (ECCV), 2012.

[pdf]

†Used LFW-a.

- Shervin Rahimzadeh Arashloo and Josef Kittler.

Efficient Processing of MRFs for Unconstrained-Pose Face Recognition.

Biometrics: Theory, Applications and Systems, 2013.

- Dong Yi, Zhen Lei, and Stan Z. Li.

Towards Pose Robust Face Recognition.

Computer Vision and Pattern Recognition (CVPR), 2013.

[pdf]

†Used outside training data for alignment.

- Karen Simonyan, Omkar M. Parkhi, Andrea Vedaldi, and Andrew Zisserman.

Fisher Vector Faces in the Wild.

British Machine Vision Conference (BMVC), 2013.

[pdf]

[webpage]

†Used face landmark detector, trained using Everingham et al., "Taking the bite out of automatic naming of characters in TV video", Image and Vision Computing, 2009. (Used only for unsupervised setting results.)

- Zhen Lei, Matti Pietikainen, and Stan Z. Li.

Learning Discriminant Face Descriptor.

IEEE Transactions on Pattern Analysis and Machine Intelligence (PAMI), 24 July 2013.

[pdf]

‡Used outside training data (FERET images including identity information) to learn descriptor.

- Xudong Cao, David Wipf, Fang Wen, and Genquan Duan.

A Practical Transfer Learning Algorithm for Face Verification.

International Conference on Computer Vision (ICCV), 2013.

[webpage]

‡Used outside training data in recognition system.

- Qiong Cao, Yiming Ying, and Peng Li.

Similarity Metric Learning for Face Recognition.

International Conference on Computer Vision (ICCV), 2013.

[pdf]

†Used LFW-a.

- Oren Barkan, Jonathan Weill, Lior Wolf, and Hagai Aronowitz.

Fast High Dimensional Vector Multiplication Face Recognition.

International Conference on Computer Vision (ICCV), 2013.

[pdf]

†Used LFW-a.

- Gary B. Huang, Honglak Lee, and Erik Learned-Miller.

Learning Hierarchical Representations for Face Verification with Convolutional Deep Belief Networks.

Computer Vision and Pattern Recognition (CVPR), 2012.

[pdf]

[webpage]

†Used LFW-a.

- VisionLabs

V2.0

Brief method description:

We follow the Unrestricted, Labeled Outside Data protocol. Our system consists of face detection, face alignment and face descriptor extraction. We train three DNN models using multiple losses and a training dataset with approximately 6M images from multiple sources, containing 460K people (the training dataset has no intersection with LFW). At test time we use original LFW images processed by our production pipeline. The similarity between image pairs is measured with the Euclidean distance.

V1.0

Brief method description:

The method makes use of metric learning and dense local image descriptors. Results are reported for the unrestricted training setup, †using LFW-a aligned images. External data is only used implicitly for face alignment.

[webpage]

[commercial system, see note at top]

- Aurora Computer Services Ltd: Aurora-c-2014-1

[pdf] Technical Report

[webpage]

Brief method description:

The face recognition technology is comprised of Aurora's proprietary algorithms, machine learning and computer vision techniques. We report results using the unrestricted training protocol, applied to the view 2 ten-fold cross validation test, using images provided by the LFW website, including the †aligned and funnelled sets and some external data used solely for alignment purposes.

[commercial system, see note at top]

- Face++

[pdf] Technical Report 2015

[pdf] Technical Report 2014

[webpage]

Brief method description:

We designed a simple, straightforward deep convolutional network, trained on 5 million labeled web-collected samples† ‡. We extracted face representations from 4 face regions and applied a simple L2 norm to predict whether the pair of faces have the same identity.

[commercial system, see note at top]

-

Yaniv Taigman, Ming Yang, Marc'Aurelio Ranzato, Lior Wolf.

DeepFace: Closing the Gap to Human-Level Performance in Face Verification.

Computer Vision and Pattern Recognition (CVPR), 2014.

[webpage]

† ‡Labeled outside data

-

Yi Sun, Xiaogang Wang, and Xiaoou Tang.

Hybrid Deep Learning for Face Verification.

International Conference on Computer Vision (ICCV), 2013.

[pdf]

†Label-free outside data

† ‡Labeled outside data

-

Junlin Hu, Jiwen Lu, and Yap-Peng Tan.

Discriminative Deep Metric Learning for Face Verification in the Wild.

Computer Vision and Pattern Recognition (CVPR), 2014.

[pdf]

†Used LFW-a

-

Thomas Berg and Peter N. Belhumeur.

POOF: Part-Based One-vs-One Features for Fine-Grained Categorization, Face Verification, and Attribute Estimation.

Computer Vision and Pattern Recognition (CVPR), 2013.

[pdf]

† ‡Outside training data is used in alignment and recognition systems.

-

Zhenyao Zhu, Ping Luo, Xiaogang Wang, and Xiaoou Tang.

Recover Canonical-View Faces in the Wild with Deep Neural Networks.

[pdf]

[commercial system, see note at top]

† ‡Outside training data is used in alignment and recognition systems.

-

Yi Sun, Xiaogang Wang, and Xiaoou Tang.

Deep Learning Face Representation from Predicting 10,000 Classes.

Computer Vision and Pattern Recognition (CVPR), 2014.

[pdf]

† ‡Outside training data is used in alignment and recognition systems.

-

Chaochao Lu and Xiaoou Tang.

Surpassing Human-Level Face Verification Performance on LFW with GaussianFace.

[pdf]

[commercial system, see note at top]

† ‡Outside training data is used in alignment and recognition systems.

-

Yi Sun, Xiaogang Wang, and Xiaoou Tang.

Deep Learning Face Representation by Joint Identification-Verification.

[pdf]

[commercial system, see note at top]

† ‡Outside training data is used in alignment and recognition systems.

-

Haoxiang Li, Gang Hua, Xiaohui Shen, Zhe Lin, and Jonathan Brandt.

Eigen-PEP for Video Face Recognition.

Asian Conference on Computer Vision (ACCV), 2014.

[pdf]

-

Shervin Rahimzadeh Arashloo and Josef Kittler.

Class-Specific Kernel Fusion of Multiple Descriptors for Face Verification Using Multiscale Binarised Statistical Image Features.

IEEE Transactions on Information Forensics and Security, 2014.

[pdf]

-

Junlin Hu, Jiwen Lu, Junsong Yuan, and Yap-Peng Tan.

Large Margin Multi-Metric Learning for Face and Kinship Verification in the Wild.

Asian Conference on Computer Vision (ACCV), 2014.

[pdf]

†Used LFW-a

-

Tal Hassner, Shai Harel*, Eran Paz* and Roee Enbar. *equal contribution

Effective Face Frontalization in Unconstrained Images.

Computer Vision and Pattern Recognition (CVPR), 2015.

[pdf]

†Outside data used by 3rd party facial feature detector

Also see LFW3D - collection of frontalized LFW images, under LFW resources.

- Taiwan Colour & Imaging Technology (TCIT)

Brief method description:

TCIT calculates the average position of the facial area and uses face recognition on that area to judge whether two faces belong to the same person or to different people. Face Feature Positioning is applied to obtain the face data template, which is used to verify different faces.

[webpage]

[commercial system, see note at top]

-

Abdelmalik Ouamane, Bengherabi Messaoud, Abderrezak Guessoum, Abdenour Hadid, and Mohamed Cheriet.

Multi-scale Multi-descriptor Local Binary Features and Exponential Discriminant Analysis for Robust Face Authentication.

International Conference on Image Processing (ICIP), 2014.

†Used LFW-a

-

Yi Sun, Xiaogang Wang, and Xiaoou Tang.

Deeply Learned Face Representations are Sparse, Selective, and Robust.

arXiv:1412.1265, 2014.

[pdf]

[commercial system, see note at top]

- betaface.com

Brief method description:

The model of our system, integrated in SDK 3.2, is a single medium-sized deep CNN with an embedding dimension of 512 (the accuracy of the model variant with dimension 128 is 0.993). Training and testing follow the Unrestricted, Labeled Outside Data protocol. Training was done on multiple datasets, with over 9 million faces and 80,000 identities in total. The input is a tight greyscale face bounding box derived from face detector output without further alignment.

[webpage]

[commercial system, see note at top]

-

Yi Sun, Ding Liang, Xiaogang Wang, and Xiaoou Tang.

DeepID3: Face Recognition with Very Deep Neural Networks.

arXiv:1502.00873, 2015.

[pdf]

[commercial system, see note at top]

-

Haoxiang Li, Gang Hua.

Hierarchical-PEP Model for Real-world Face Recognition.

Computer Vision and Pattern Recognition (CVPR), 2015.

[pdf]

-

insky.so

Brief method description:

We used the original LFW images to run the test procedure. Our system automatically selects the appropriate images according to the requirements and outputs the face recognition results. We then used the standard method to obtain the ROC curve. We did not use LFW images for training.
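The description above does not say which procedure "the standard method" refers to. As a minimal sketch, assuming it means sweeping a decision threshold over the pair similarity scores, ROC points could be computed as follows. The function name `roc_points` and the toy scores are hypothetical and are not LFW results.

```python
def roc_points(scores, labels):
    """Return (false_positive_rate, true_positive_rate) pairs, one per threshold.

    scores: similarity scores, where higher means "more likely the same person".
    labels: 1 for matched pairs, 0 for mismatched pairs.
    Assumes at least one matched and one mismatched pair.
    """
    positives = sum(labels)
    negatives = len(labels) - positives
    points = [(0.0, 0.0)]
    # Sweep the threshold from the highest observed score down to the lowest.
    for threshold in sorted(set(scores), reverse=True):
        tp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 1)
        fp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 0)
        points.append((fp / negatives, tp / positives))
    return points


# Hypothetical scores for four image pairs (illustration only).
example = roc_points([0.9, 0.8, 0.4, 0.2], [1, 0, 1, 0])
```

Each returned point corresponds to one threshold; plotting true positive rate against false positive rate gives the curve.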

[webpage]

[commercial system, see note at top]

-

Uni-Ubi

Brief method description:

At test time, we used the original LFW images, converted to greyscale and auto-aligned with our face detector and alignment system, and followed the unrestricted, labeled outside data protocol. LFW was not used for training or fine-tuning.

[webpage]

[commercial system, see note at top]

-

Xiangyu Zhu, Zhen Lei, Junjie Yan, Dong Yi, and Stan Z. Li.

High-Fidelity Pose and Expression Normalization for Face Recognition in the Wild.

Computer Vision and Pattern Recognition (CVPR), 2015.

[pdf]

-

Florian Schroff, Dmitry Kalenichenko, and James Philbin.

FaceNet: A Unified Embedding for Face Recognition and Clustering.

Computer Vision and Pattern Recognition (CVPR), 2015.

[pdf]

-

YouTu Lab, Tencent

Brief method description:

We followed the Unrestricted, Labeled Outside Data protocol. The YouTu Celebrities Face (YCF) dataset, which contains about 20,000 individuals and 2 million face images, is used as the training set. With a multi-machine and multi-GPU TensorFlow cluster, three extremely deep inception-resnet-like networks (depth = 360, 540, 720) are trained to accomplish face verification tasks. With the final fc layer output as features, the most powerful single model can reach 0.9977 mean accuracy.

[webpage]

[commercial system, see note at top]

-

Jingtuo Liu, Yafeng Deng, Tao Bai, Zhengping Wei, and Chang Huang; Baidu

Targeting Ultimate Accuracy: Face Recognition via Deep Embedding.

[pdf]

[commercial system, see note at top]

-

AuthenMetric

Brief method description:

The system consists of a workflow of face detection, face alignment, face feature extraction, and face matching, all using our own algorithms. 25 face feature extraction models were trained using a deep network with a training set of 500,000 face images of 10,000 individuals (no LFW subjects are included in the training set), each on a different face patch. The face matching (similarity of two faces) module was trained using a deep metric learning network, where a face is represented as the concatenation of the 25 feature vectors. The training and test follow the Unrestricted, Labeled Outside Data protocol.

[webpage]

[commercial system, see note at top]

-

Changxing Ding, Jonghyun Choi, Dacheng Tao, and Larry S. Davis.

Multi-Directional Multi-Level Dual-Cross Patterns for Robust Face Recognition.

Pattern Analysis and Machine Intelligence, Issue 99, July 2015.

[pdf]

-

Changxing Ding and Dacheng Tao.

Robust Face Recognition via Multimodal Deep Face Representation.

To appear in Transactions on Multimedia.

[pdf]

-

Felix Juefei-Xu, Khoa Luu, and Marios Savvides.

Spartans: Single-Sample Periocular-Based Alignment-Robust Recognition Technique Applied to Non-Frontal Scenarios.

Image Processing, Volume 24, Issue 12, August 2015.

[pdf]

-

Abdelmalik Ouamane, Messaoud Bengherabi, Abdenour Hadid and Mohamed Cheriet.

Side-Information based Exponential Discriminant Analysis for Face Verification in the Wild.

Biometrics in the Wild, Automatic Face and Gesture Recognition Workshop, 2015.

[pdf]

-

Cloudwalk

Brief method description:

Our system has a complete pipeline for face recognition, and each component is implemented by ourselves. At test time, we used the original LFW images. The system automatically detects the correct face, aligns it, and warps it to a fixed size of 128*128. For feature extraction, 6 DCNN models were trained, each with a different data augmentation method, on a training set of 25,580 subjects and 2,545,659 face images (no LFW subjects were used for training or fine-tuning).

[webpage]

[commercial system, see note at top]

-

Beijing Faceall Co., Ltd

Brief method description:

We followed the Unrestricted, Labeled Outside Data protocol and built our system, which consists of face detection, face alignment, face feature extraction and face matching, all by ourselves. We trained 3 models for face feature extraction on our own dataset of 4,260,000 images of more than 60,000 identities (no LFW subjects were used for training or fine-tuning). In the test procedure, we used the original LFW images, which are detected with our face detection system and auto-aligned with our alignment system. After extracting 3 feature vectors from the 3 trained models, we concatenate the vectors directly and calculate the Euclidean distance between test images as the similarity measurement.
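The matching step described above (concatenate the per-model feature vectors, then compare by Euclidean distance) can be illustrated with a minimal sketch. It is not the vendor's implementation; the helper names and the toy two-dimensional features are hypothetical.

```python
import math


def concatenate(feature_vectors):
    """Join several per-model feature vectors into one flat descriptor."""
    return [value for vector in feature_vectors for value in vector]


def euclidean_distance(a, b):
    """Euclidean (L2) distance between two equal-length descriptors."""
    if len(a) != len(b):
        raise ValueError("descriptors must have the same length")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


# Hypothetical outputs of three models for two face images.
face_a = concatenate([[1.0, 0.0], [0.0, 2.0], [3.0, 0.0]])
face_b = concatenate([[1.0, 0.0], [0.0, 2.0], [0.0, 4.0]])
distance = euclidean_distance(face_a, face_b)  # smaller means more similar
```

A pair would then be declared "matched" when the distance falls below a threshold chosen on training folds.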

[webpage]

[commercial system, see note at top]

-

JustMeTalk

Brief method description:

We collected 1.5 million photos and removed any face photos that appear in the LFW database. Based on deep convolutional networks, we trained 4 feature extractors and fused their features through metric learning. During testing, we follow the unrestricted, labeled outside data protocol.

[webpage]

[commercial system, see note at top]

-

Lilei Zheng, Khalid Idrissi, Christophe Garcia, Stefan Duffner, and Atilla Baskurt.

Triangular Similarity Metric Learning for Face Verification.

International Conference on Automatic Face and Gesture Recognition (FG), 2015.

[www]

-

Facevisa

Brief method description:

We follow the unrestricted, labeled outside data protocol. We collected more than 2 million face photos and removed any photos that appear in the LFW database. We combine features extracted from common deep CNNs with features from other, more efficient deep CNNs that we devised, which we call simple looping structured CNNs and accumulated looping structured CNNs. Face images are compared by L2 distance after feature embedding.

[webpage]

[commercial system, see note at top]

-

Iacopo Masi, Anh Tuan Tran, Jatuporn Toy Leksut, Tal Hassner and Gerard Medioni.

Do We Really Need to Collect Millions of Faces for Effective Face Recognition?

European Conference on Computer Vision (ECCV), 2016

[pdf]

[www]

-

ColorReco

Brief method description:

We use a Chinese face dataset to train our algorithm with a deep convolutional neural network, and shrink the model so that it can run on a mobile phone in real time. At test time, we used the original LFW images, converted to greyscale and auto-aligned with our face detector and alignment system, and followed the unrestricted, labeled outside data protocol. LFW was not used for training or fine-tuning.

[webpage]

[commercial system, see note at top]

-

Asaphus Vision

Brief method description:

Our processing pipeline is optimized for fast execution on embedded processors. We use face detection, alignment, 3D frontalization, and a convolutional network that has been trained on web-collected and infrared images and has been optimized for low execution time. We use the LFW data for evaluation, but not for training or tuning.

[webpage]

[commercial system, see note at top]

-

Daream

Brief method description:

We followed the unrestricted, labeled outside data protocol, and our system contains our internal off-the-shelf face detection, face alignment and face verification algorithms. Specifically, after aligning all the faces to the canonical shape, we use a residual network, a wide residual network, a highway path network and AlexNet to extract the face representation. Each of them is trained on our own dataset, which contains 1.2 million images of more than 30,000 persons (no overlap with LFW subjects and images). The feature vectors from the 4 DNNs are concatenated and fed to train the Joint Bayesian model. All parameters are tuned on our own validation set, and we did not fine-tune the model on the LFW dataset. In the test phase, we directly use the original LFW images and process all images with our end-to-end pipeline.

[webpage]

[commercial system, see note at top]

-

Meng Xi, Liang Chen, Desanka Polajnar, and Weiyang Tong.

Local Binary Pattern Network: A Deep Learning Approach for Face Recognition.

International Conference on Image Processing (ICIP), 2016.

[www]

-

Dahua-FaceImage

Brief method description:

We followed the Unrestricted, Labeled Outside Data protocol and built our system, which has a complete pipeline for face recognition, by ourselves. We collected a dataset with 2 million images of more than 20,000 persons, which has no intersection with LFW. 30 deep CNN models were trained on our own dataset, and the features of each model were combined with Joint Bayesian.

[webpage]

[commercial system, see note at top]

-

Easen Electron

Brief method description:

We followed the unrestricted, labeled outside data protocol and built a face verification system with our own face detection and alignment algorithms. We used a training dataset containing 3.1M face images of 59K persons collected from the Internet, which has no intersection with the LFW dataset. We trained six deep CNN models, one of which is an Inception-ResNet-like network and the other five are ResNet-50 networks, each operating on a different face patch. These models achieved 99.78%, 99.72%, 99.50%, 98.97%, 99.48% and 99.28% accuracy, respectively. At test time we used the original LFW images, and the feature vectors from the six deep CNN models are concatenated and transformed to a low-dimensional vector by metric learning. After feature extraction, the distance between two feature vectors is measured by Euclidean distance.

[webpage]

[commercial system, see note at top]

-

Skytop Gaia

Brief method description:

We followed the Unrestricted-Label-Free Outside Data protocol and built our system on four face alignment algorithms, all based on deep CNNs, to meet the needs of different scenarios, that is, different trade-offs between detection speed and accuracy. The whole system is trained on our own dataset, which was set up with Shenzhen University. Our training set contains 921,600 face images of 18,000 individuals. We divide it into two parts: a training dataset and a test dataset. Our own dataset has no intersection with LFW. The ratio of the training dataset to the test dataset is about 1:10.

[webpage]

[commercial system, see note at top]

-

Anh Tuan Tran, Tal Hassner, Iacopo Masi and Gerard Medioni.

Regressing Robust and Discriminative 3D Morphable Models with a very Deep Neural Network

arXiv:1612.04904, 15 Dec 2016

[pdf]

[www]

-

Samtech Facequest

Brief method description:

Our system is built on a database consisting largely of Indian faces collected from Indian websites and internet companies. Our database has no intersection with LFW identities. We follow the unrestricted, labeled outside data protocol. We use 200,000 identities with 10 million images for training 5 deep convolutional network models. We fuse the 5 feature vectors and then apply PCA for dimensionality reduction. To calculate the average accuracy over the 10 LFW folds, for every set we calculate the accuracy using the best threshold from the remaining 9 sets.
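The threshold selection described above (score each fold with the best threshold found on the other nine) can be sketched as follows. This is an illustration under stated assumptions rather than any submitter's code: the candidate thresholds are taken to be the observed training scores, a higher score is taken to mean "same person", and the toy data uses 3 folds instead of 10.

```python
def accuracy(scores, labels, threshold):
    """Fraction of pairs classified correctly when score >= threshold means 'same'."""
    correct = sum(1 for s, y in zip(scores, labels) if (s >= threshold) == bool(y))
    return correct / len(scores)


def best_threshold(scores, labels):
    """Pick the observed score that gives the highest accuracy as a threshold."""
    return max(sorted(set(scores)), key=lambda t: accuracy(scores, labels, t))


def cross_validated_accuracy(folds):
    """folds: list of (scores, labels) tuples, one per fold.

    For each held-out fold, the threshold is chosen on the remaining folds only.
    """
    per_fold = []
    for i, (test_scores, test_labels) in enumerate(folds):
        train_scores = [s for j, f in enumerate(folds) if j != i for s in f[0]]
        train_labels = [y for j, f in enumerate(folds) if j != i for y in f[1]]
        threshold = best_threshold(train_scores, train_labels)
        per_fold.append(accuracy(test_scores, test_labels, threshold))
    return sum(per_fold) / len(per_fold)


# Hypothetical toy data with 3 folds (illustration only).
toy_folds = [
    ([0.9, 0.1], [1, 0]),
    ([0.8, 0.3], [1, 0]),
    ([0.7, 0.2], [1, 0]),
]
mean_accuracy = cross_validated_accuracy(toy_folds)
```

Because the threshold never sees the held-out fold, a fold whose scores sit lower than the others can be misclassified, which is exactly the effect this procedure is meant to expose.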

[webpage]

[commercial system, see note at top]

-

Juha Ylioinas, Juho Kannala, Abdenour Hadid, and Matti Pietikäinen.

Face Recognition Using Smoothed High-Dimensional Representation

Scandinavian Conference on Image Analysis, 2015

[pdf]

-

Christos Sagonas, Yannis Panagakis, Stefanos Zafeiriou, and Maja Pantic.

Robust Statistical Frontalization of Human and Animal Faces

International Journal of Computer Vision (IJCV), July 2016

[pdf]

-

XYZ Robot

Brief method description:

We followed the unrestricted, labeled outside data protocol. Our system has a complete pipeline for face recognition including face detection, face alignment, face normalization and face matching, all implemented by ourselves. We trained 6 DCNN models for feature extraction on our own data, which consists of two datasets. One is a noisy set of 500,000 face images of 10,000 individuals; the other is a clean set of 300,000 images of 6,000 individuals (no LFW subjects were used for training or fine-tuning). We concatenate the feature vectors from the 6 DCNN models and apply PCA to train Joint Bayesian on our datasets. At test time, we used the original LFW images, with faces detected, auto-aligned and auto-normalized by our system.

[webpage]

[commercial system, see note at top]

-

THU CV-AI Lab

Brief method description:

We followed the Unrestricted, Labeled Outside Data protocol and built our system with our own face detector and alignment. Two datasets are used in our training step, neither of which has any intersection with LFW. A dataset with 6 million images of more than 80,000 persons is used for training the deep convolutional networks; the other dataset, with 400,000 images of 10,000 individuals, is used for metric learning, which performs feature dimension reduction instead of PCA. 11 deep CNN models are trained. At test time, we used the original LFW images, concatenated the feature vectors from the 11 models after dimension reduction, and used the L2 distance to compare the given pairs.

[webpage]

[commercial system, see note at top]

-

PingAn AI Lab

Brief method description:

We followed the unrestricted labeled outside data protocol and built our system using our face recognition pipeline. Our dataset includes 3 million images of more than 60,000 individuals collected from the Internet and has no intersection with the LFW dataset. We trained 3 improved Inception-ResNet-like network models and combined their features. At test time, we directly used the original LFW images and processed all the images with our end-to-end system.

[webpage]

[commercial system, see note at top]

-

dlib - open source machine learning library

Brief author's description:

As of February 2017, dlib includes a face recognition model. This model is a ResNet network with 27 conv layers. It's essentially a version of the ResNet-34 network from the paper Deep Residual Learning for Image Recognition by He, Zhang, Ren, and Sun with a few layers removed and the number of filters per layer reduced by half.

The network was trained from scratch on a dataset of about 3 million faces. This dataset is derived from a number of datasets: the face scrub dataset, the VGG dataset, and a large number of images I scraped from the internet. I tried as best I could to clean up the dataset by removing labeling errors, which meant filtering out a lot of stuff from VGG. I did this by repeatedly training a face recognition CNN and then using graph clustering methods and a lot of manual review to clean up the dataset. In the end about half the images are from VGG and face scrub. Also, the total number of individual identities in the dataset is 7485. I made sure to avoid overlap with identities in LFW.

The network training started with randomly initialized weights and used a structured metric loss that tries to project all the identities into non-overlapping balls of radius 0.6. The loss is basically a type of pair-wise hinge loss that runs over all pairs in a mini-batch and includes hard-negative mining at the mini-batch level.
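As a rough illustration of the kind of loss described above, the sketch below computes a pair-wise hinge loss over a mini-batch with a simple form of hard-negative mining. It is not dlib's implementation: the 0.6 value is treated here as a threshold on the distance between two embeddings, while the `MARGIN` value and the rule of keeping as many negatives as there are positive pairs are assumptions made only for this example.

```python
import math
from itertools import combinations

RADIUS = 0.6   # distance threshold, taken from the description above
MARGIN = 0.04  # hypothetical margin, chosen only for illustration


def pairwise_hinge_loss(embeddings, identities):
    """Mean hinge loss over same-identity pairs plus the hardest different-identity pairs."""
    positive_losses, negative_losses = [], []
    for i, j in combinations(range(len(embeddings)), 2):
        d = math.dist(embeddings[i], embeddings[j])
        if identities[i] == identities[j]:
            # Same person: penalize distances above RADIUS - MARGIN.
            positive_losses.append(max(0.0, d - (RADIUS - MARGIN)))
        else:
            # Different people: penalize distances below RADIUS + MARGIN.
            negative_losses.append(max(0.0, (RADIUS + MARGIN) - d))
    # Hard-negative mining within the mini-batch: keep only the worst
    # negatives, as many as there are positive pairs (an assumed rule).
    negative_losses.sort(reverse=True)
    kept = negative_losses[:max(1, len(positive_losses))]
    selected = positive_losses + kept
    return sum(selected) / len(selected) if selected else 0.0


# Hypothetical 2-D embeddings for a batch of four faces and three identities.
batch = [[0.0, 0.0], [0.3, 0.0], [1.0, 0.0], [0.0, 0.5]]
loss = pairwise_hinge_loss(batch, ["a", "a", "b", "c"])
```

In a real training loop the loss would be differentiated with respect to the network weights; the sketch only evaluates it for fixed embeddings.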

The code to run the model is publicly available on dlib's github page. From there you can find links to training code as well.

[webpage]

-

Cyberextruder - Aureus 5.6

Brief author's description:

We followed the unrestricted labelled outside data protocol using our in-house trained face detection, landmark positioning, 2D to 3D algorithms and face recognition algorithm called Aureus. We trained our system using 3 million images of 30 thousand people. Care was taken to ensure that no training images or people were present in the totality of the LFW dataset. The face recognition algorithm utilizes a wide and shallow convolution network design with a novel method of non-linear activation which results in a compact, efficient model. The algorithm generates 256 byte templates in 100 milliseconds using a single 3.4GHz core (no GPU required). Templates are compared at a rate of 14.3 million per second per core.

[webpage]

[commercial system, see note at top]

-

Beijing Orion Star Technology Co., Ltd.

Brief author's description:

We followed the unrestricted labeled outside data protocol and built our face recognition system. We collected a dataset from the Internet with 4 million images of more than 80,000 people, which has no intersection with the LFW dataset. We trained only one CNN model with ResNet-50, and used the Euclidean distance to measure the similarity of two images. At test time, we processed the original LFW images with our own system.

[webpage]

[commercial system, see note at top]

-

Yuntu WiseSight

Brief author's description:

We followed the unrestricted labelled outside data protocol to build our face recognition system. We collected about 5 million images of more than 50 thousand individuals from the internet. These images have been cleaned, so the dataset has no intersection with LFW. We trained only one ResNet network. At test time, we used the original LFW images. After processing these images with our own face detection and face alignment, we flipped every face horizontally. We combined the features and used PCA for feature dimension reduction.

[webpage]

[commercial system, see note at top]

-

Turing123

Brief author's description:

The model of our system is a single, straightforward deep convolutional neural network with 95M parameters before compression; the embedding dimension is 256. Multi-loss is used to enhance the discriminative power of the deeply learned features during training. Training and testing follow the Unrestricted, Labeled Outside Data protocol. 4 million images of nearly 40 thousand individuals, most of them crawled from the web, are used to train our feature extraction model. We removed individuals that overlap with LFW and cleaned labeling errors by repeated graph clustering; some manual cleanup was also done. When reporting results, we strictly calculate the average accuracy over the 10 folds, using for each set the best threshold from the remaining 9 sets. Given face pairs are compared by cosine similarity after feature embedding.
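Comparing two embeddings by cosine similarity, as mentioned above, can be sketched in a few lines. The function names and the threshold passed to `same_person` are hypothetical; in practice the threshold would be chosen on the training folds.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two embedding vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


def same_person(a, b, threshold):
    """Declare a match when the similarity reaches the given threshold."""
    return cosine_similarity(a, b) >= threshold


# Hypothetical 2-D embeddings; real systems use hundreds of dimensions.
similar = same_person([1.0, 0.0], [1.0, 1.0], threshold=0.5)
different = same_person([1.0, 0.0], [0.0, 1.0], threshold=0.5)
```

Unlike Euclidean distance, cosine similarity ignores vector length, so it gives the same result whether or not the embeddings are normalized first.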

[webpage]

[commercial system, see note at top]

-

Hisign Technology

Brief author's description:

We follow the unrestricted, labeled outside data protocol. Three different models were trained on our own dataset, which contains about 10,000 individuals and 1 million face images (no overlapping with LFW subjects and images). The face is represented as the concatenation of these feature vectors. We used original LFW images and processed all the images with our end to end system in test.

[webpage]

[commercial system, see note at top]

-

Deepmark

Brief author's description:

We followed the unrestricted labelled outside data protocol. We trained our system using ~5 million images of 70 thousand people. There was no intersection between LFW and the training dataset. We use efficient feature maps and a joint triple loss function during training, which results in a very fast and fairly accurate model. Feature extraction takes 400 ms on a Raspberry Pi 3.

[webpage]

[commercial system, see note at top]

-

Force Infosystems

Brief author's description:

We use a database with 20,000 identities and 2 million images, without any intersection with the identities in LFW. We follow the unrestricted, labeled outside data protocol. We trained a single deep convolutional model, followed by a novel feature training approach to generate discriminative feature output. Our network, along with face detection, runs in real time on a CPU.

[webpage]

[commercial system, see note at top]

-

ReadSense

Brief author's description:

We followed the unrestricted, labeled outside data protocol. The data for training the face recognition and verification net include 4 million images of more than 70,000 individuals collected from the Internet, which has no intersection with the LFW dataset. Four DNN models of improved ResNet-like network were trained and combined with multi-loss. Our end-to-end system consists of face detection, alignment, feature extraction and feature matching. We use cosine distance to measure the similarity between two feature vectors and calculate average accuracy for each subset using the best threshold from the rest of 9 subsets on 10-folds.

[webpage]

[commercial system, see note at top]

-

CM-CV&AR

Brief author's description:

We followed the unrestricted, labeled outside data protocol. We used a dataset of ~8 million images with 80,000 individuals collected from the internet, with no intersection with the LFW dataset. A single ResNet-like CNN model with 100 layers was trained with multi-loss. Our system consists of face detection, alignment, feature extraction and feature matching. During test, we use cosine distance as similarity measurement.

[webpage]

[commercial system, see note at top]

-

sensingtech

Brief author's description:

The system consists of a workflow of face detection, face landmark, feature extraction, and feature matching, all using our own algorithm. 13 face feature extraction models were trained using a deep CNN network with a training set of 800,000 face images of 20,000 individuals (no LFW subjects are included in the training set). The face matching module was trained using a deep metric learning network, where a face is represented as the concatenation of the 13 feature vectors. The training and test follow the Unrestricted, Labeled Outside Data protocol.

[webpage]

[commercial system, see note at top]

-

Glasssix

Brief author's description:

We followed the Unrestricted, Labeled Outside Data protocol. The ratio of White to Black to Asian is 1:1:1 in the training set, which contains 100,000 individuals and 2 million face images. We spent a week training the networks, which contain an improved ResNet-34 layer and an improved triplet loss layer, on a dual Maxwell GTX Titan X machine with a four-stage training method.

[webpage]

[commercial system, see note at top]

-

icarevision

Brief author's description:

We followed the unrestricted, labeled outside data protocol. We collected 2 million images of more than 80,000 individuals from the Internet, with no intersection with the LFW dataset. We trained a single ResNet-like network with softmax loss. We used only one model to extract the features. Cosine distance is used to measure the similarity between two features.

[webpage]

[commercial system, see note at top]

-

yunshitu

Brief author's description:

We followed the unrestricted, labeled outside data protocol. The training dataset contains 100,000 individuals with 10 million face images after data augmentation (no LFW subjects are included in the training set). We trained a single model with an improved r100 CNN (280MB) and two angle-based losses under curriculum learning. Cosine distance is employed to measure the similarity of test features in pairs.

[webpage]

[commercial system, see note at top]

-

RemarkFace

Brief author's description:

We followed the unrestricted labelled outside data protocol to build our face recognition system. We collected a dataset from the Internet with 5 million images of more than 70,000 people, which has no intersection with the LFW dataset. We built a face verification system with our own face detection and alignment algorithms, which are all based on deep CNNs. We trained only one inception-resnet-like network. For the final reported result, we strictly calculate the accuracy of each set using the best threshold from the remaining 9 sets of the 10 folds, with the L2 distance used to compare the given pairs, and then average.

[webpage]

[commercial system, see note at top]

-

IntelliVision

Brief author's description:

We followed the unrestricted, labeled outside data protocol for our face recognition system. Our dataset consists of 80,000 identities and 8 million images. It has no intersection with the identities in LFW. Our face recognition system runs end to end on deep CNN models, including face detection, face alignment and feature extraction.

[webpage]

[commercial system, see note at top]

-

senscape

Brief author's description:

We followed the unrestricted labeled outside data protocol and built our commercial face recognition system. The training data includes about 400,000 face images of 10,000+ individuals, which has no intersection with the LFW dataset. We trained only one modified Resnet model with 27 convolution layers. The output feature dimension of our model is 256, and the Euclidean distance is used to measure the similarity of images.

[webpage]

[commercial system, see note at top]

-

4th Institute of Meiya Pico

Brief author's description:

We follow the Unrestricted, Labeled Outside Data protocol. We collected a dataset from internet with 3 million images of more than 60 thousand individuals, which has no intersection with the LFW dataset. Our model is a ResNet network with 20 layers, using 2 loss functions. During the test, we used original LFW images and we strictly calculated average accuracy for every set using the best threshold from the rest of 9 sets on 10 folds (LFW was not used for training or fine-tuning).

[webpage]

[commercial system, see note at top]

-

Faceter.io

Brief author's description:

We used two slightly modified ResNet-34 DNN models. Our training dataset contains about 3.2M samples and 40K classes and has no intersection with LFW. We trained each model with multi-loss and then fine-tuned it with triplet loss. One model achieved 99.75% accuracy and the other 99.72%. We concatenated the feature vectors and calculated the Euclidean distance between test images as the similarity measurement. The final mean accuracy is 99.78%.

[webpage]

[commercial system, see note at top]

-

Pegatron

Brief author's description:

We collected 8 million face images of 130k individuals and removed the overlap with LFW faces. We then aligned, rotated and cropped the faces to grey images for training a 27-layer ResNet-like CNN. We extract features from the CNN and compute the cosine similarity between two persons.

[webpage]

[commercial system, see note at top]

-

CHTFace, Chunghwa Telecom

Brief author's description:

We follow the Unrestricted, Labeled Outside Data protocol. Our system consists of a workflow of face detection, face alignment, face feature extraction, and face matching. We trained a single DNN model using softmax loss and some additional regularization losses. We used MSCeleb1M as our training data. The identities overlapped with LFW were removed and only 40k of the remaining ones were chosen for training. The face feature dimension is 128. The similarities between image pairs were measured with the Euclidean distance in the feature spaces.

[webpage]

[commercial system, see note at top]

-

FRDC

Brief author's description:

We followed the unrestricted, labeled outside data protocol. The training data includes 5 million images of more than 70,000 individuals collected from the Internet, which has no intersection with the LFW dataset. We trained a single ResNet-like network with multi-loss. Our end-to-end system consists of face detection, alignment, feature extraction and feature matching. We only used one model to extract the features, and the cosine distance was used to measure the similarity between two feature vectors. The average accuracy for each subset was calculated using the best threshold from the rest of 9 subsets on 10-folds.

[webpage]

[commercial system, see note at top]

-

Meryem Uzun-Per, Muhittin Gökmen.

Face recognition with Patch-based Local Walsh Transform.

Signal Processing: Image Communication, vol. 61, pp. 85-96, February 2018

[pdf]

-

YI+AI

Brief author's description:

We follow the Unrestricted, Labeled Outside Data protocol. Our system consists of face detection, face alignment and face descriptor extraction. We train a CNN model using multiple losses and a training dataset with approximately 2M images from multiple sources, containing 80K people (the training dataset has no intersection with LFW). At test time we use the original LFW images processed by our production pipeline and apply a simple L2 normalization to the descriptors. The similarity between image pairs is measured with the Euclidean distance.

[webpage]

[commercial system, see note at top]
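
Several descriptions on this page use Euclidean distance on L2-normalized descriptors, while others use cosine similarity. For unit-length vectors the two are directly related: the squared Euclidean distance equals 2 minus twice the cosine similarity, so both produce the same ranking of pairs. The sketch below checks this numerically; the helper names and example vectors are assumptions made for illustration.

```python
import math

def l2_normalize(v):
    # Scale the vector to unit Euclidean length.
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def cosine_similarity(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def normalized_euclidean(u, v):
    # Euclidean distance between the L2-normalized descriptors.
    return math.dist(l2_normalize(u), l2_normalize(v))

u, v = [3.0, 4.0], [4.0, 3.0]
squared_distance = normalized_euclidean(u, v) ** 2
from_cosine = 2.0 - 2.0 * cosine_similarity(u, v)
```

Here `squared_distance` and `from_cosine` agree up to floating-point rounding.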

-

Aratek

Brief author's description:

We followed the unrestricted, labeled outside data protocol and built a face verification system with our face detection and alignment algorithms. We used a training dataset containing 3.3 million images of more than 9000 persons, which has no intersection with LFW. The deep CNN model we trained is the Inception-ResNet-v1 network. The embedding dimension is 512, and the distance metric is cosine similarity.

[webpage]

[commercial system, see note at top]

-

Cylltech

Brief author's description:

We followed the unrestricted, labeled outside data protocol. The training dataset includes 3 million images of more than 60,000 individuals collected from the Internet and has no intersection with the LFW dataset. A deeper residual CNN was trained. We used the original LFW images and processed all of them with our end-to-end system.

[webpage]

[commercial system, see note at top]

-

Emrah Basaran, Muhittin Gökmen, and Mustafa E. Kamasak.

An Efficient Multiscale Scheme Using Local Zernike Moments for Face Recognition.

Applied Sciences, vol. 8, no. 5, 2018

[pdf]

-

TerminAI

Brief author's description:

We followed the unrestricted, labeled outside data protocol to build our own face recognition system, which consists of face detection, alignment, feature extraction and feature matching. We trained a single ResNet-like model with an angle-based loss to extract the features. The training set contains 80,000 individuals with more than 3.5 million face images and has no intersection with the LFW dataset. At test time, we processed the original LFW images with our own system, and cosine distance was used to measure the similarity between two 512-dimensional feature vectors.

[webpage]

[commercial system, see note at top]

-

ever.ai

Brief author's description:

We followed the unrestricted, labeled outside data protocol. Our model is trained on a private photo database with no intersection with LFW. We trained custom face and landmark detectors for preprocessing and built our primary face recognition model on a dataset containing over 100k ids and 10M images. The recognition model is a single deep resnet which outputs an embedding vector given an input image, and similarity between a pair of images is evaluated via an l2-norm distance between their respective embeddings.

[webpage]

[commercial system, see note at top]

-

Camvi Technologies

Brief author's description:

We followed the unrestricted, labeled outside data protocol. Our model is trained on a subset of the Microsoft 1M face dataset, containing around 80K identities and 5 million faces. We tried our best to remove training faces that overlap with LFW, identified by close similarity scores. The recognition model is a single CNN of 230 MB which outputs an embedding vector of 256 floating-point numbers for an input image. We use the L2 distance to measure the similarity between two feature vectors. The accuracy for each of the 10 subsets is calculated using the best threshold found on the remaining 9 subsets, and the results are averaged.

[webpage]

[commercial system, see note at top]

-

IFLYTEK-CV

Brief author's description:

We followed the Unrestricted, Labeled Outside Data protocol. The MS-Celeb-1M dataset is used as the training set, which contains about 85,000 individuals and 3.8 million face images. We tried our best to remove IDs and similar faces that overlap with LFW. We simply use ResNet-like deep networks to train the face model. In the test phase, we use only unflipped LFW images for evaluation. With a single model, we reach a mean accuracy of 0.9980.

[webpage]

[commercial system, see note at top]

-

DRD, CTBC Bank

Brief author's description:

We follow the Unrestricted, Labeled Outside Data protocol. We trained a single DNN model using an angular distance loss. The training data is a subset (around 80K identities, 5M images) of MS1M that excludes data overlapping with LFW.

[webpage]

[commercial system, see note at top]

-

Innovative Technology Ltd, United Kingdom

Brief author's description:

Our model is based on a deep residual neural network trained in-house on over 5 million images, which is then embedded onto our Image Capture Unit (ICU). Each face is represented using a feature vector of 512 values. We use cosine distance to measure the similarity between two feature vectors. The accuracy for each of the 10 subsets is calculated using the best threshold found on the remaining 9 subsets, and the results are averaged. Training and testing follow the Unrestricted, Labelled Outside Data protocol.

[webpage]

[commercial system, see note at top]

-

Oz Forensics

Brief author's description:

We followed the Unrestricted, Labeled Outside Data protocol. The original LFW images were used. The processing pipeline included our face and landmark detectors. Images were aligned using only two points: the left and right eyes. We used ArcFace loss for training. The training set has over 3 million images of 30k persons and has no intersection with the LFW dataset.

[webpage]

[commercial system, see note at top]
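
Two-point alignment of the kind mentioned above can be expressed as a similarity transform (rotation, uniform scale and translation) that maps the detected eye centers onto fixed target positions. The sketch below is one way to compute it, treating 2-D points as complex numbers. The function names and the target coordinates are hypothetical, chosen only for illustration, and the description does not state which positions or method were actually used.

```python
def similarity_from_eyes(left_eye, right_eye, target_left, target_right):
    # Represent each (x, y) point as the complex number x + yj.
    p1, p2 = complex(*left_eye), complex(*right_eye)
    q1, q2 = complex(*target_left), complex(*target_right)
    a = (q2 - q1) / (p2 - p1)  # rotation and uniform scale
    b = q1 - a * p1            # translation
    return a, b

def apply_transform(a, b, point):
    # Map a point through z -> a * z + b and return it as (x, y).
    z = a * complex(*point) + b
    return (z.real, z.imag)

# Detected eyes at (10, 10) and (30, 10); hypothetical targets (0, 0) and (10, 0).
a, b = similarity_from_eyes((10, 10), (30, 10), (0, 0), (10, 0))
aligned_right_eye = apply_transform(a, b, (30, 10))
```

Because two point pairs determine a similarity transform exactly, both eyes land on their targets; every other pixel coordinate is mapped by the same `a` and `b`.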