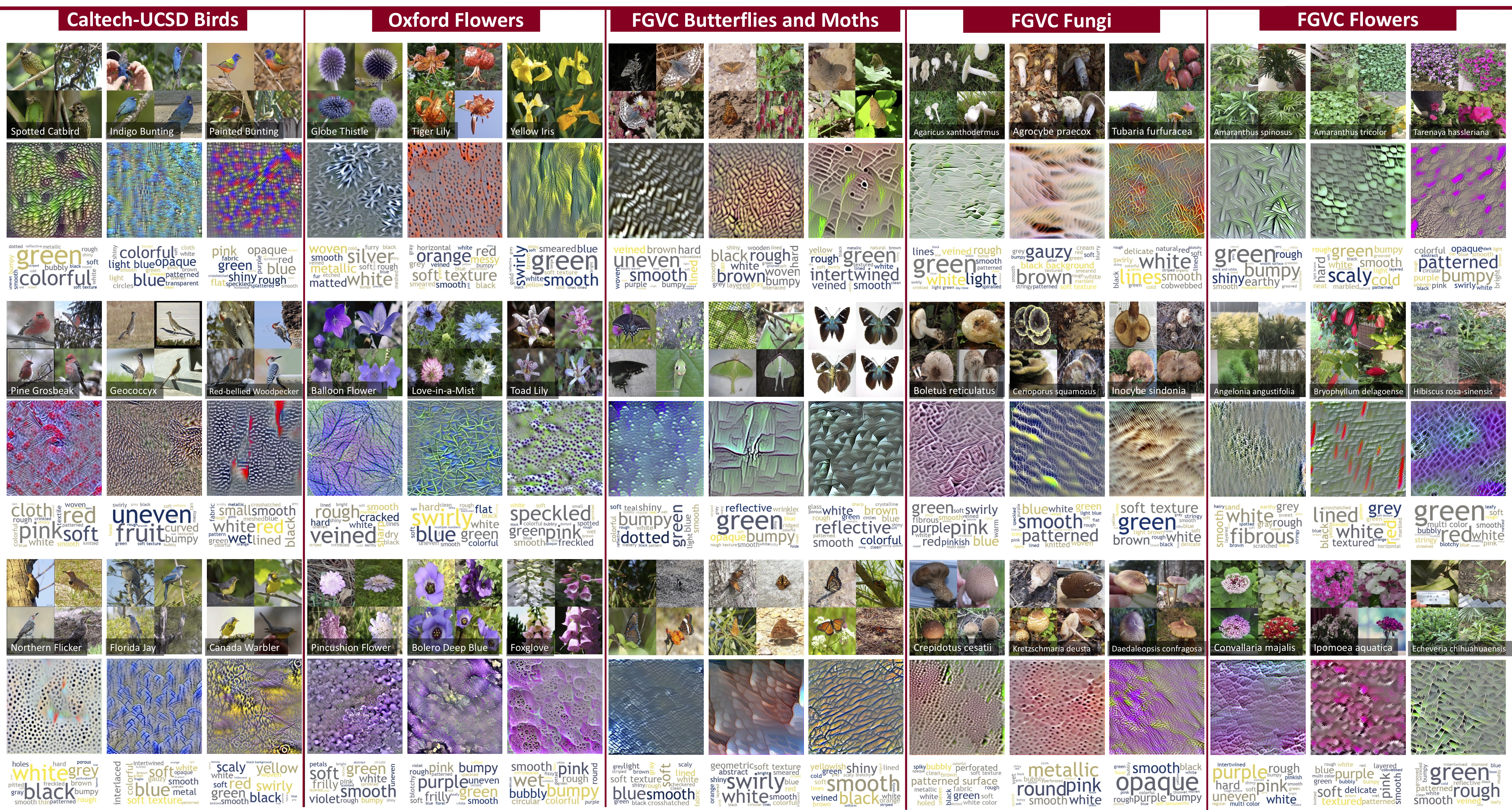

Visualizing and Describing Fine-grained Categories as Textures

Abstract

We analyze how categories from recent fine-grained visual categorization (FGVC) challenges can be described by their textural content. The motivation is twofold: subtle differences between species of birds or butterflies can often be described in terms of their texture, and several top-performing networks are inspired by texture-based representations. These representations are characterized by orderless pooling of second-order filter activations, as in bilinear CNNs and the winner of the iNaturalist 2018 challenge.
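To make "orderless pooling of second-order filter activations" concrete, here is a minimal NumPy sketch. It assumes a feature map of shape `(H, W, D)` taken from some convolutional layer; the function name `bilinear_pool` is illustrative and not from the original work. The signed square root and L2 normalization are post-processing steps commonly applied to bilinear features, and are included here as an assumption about the pipeline.

```python
import numpy as np

def bilinear_pool(features):
    """Orderless second-order pooling of a (H, W, D) feature map.

    Averages the outer product of each local feature with itself over
    all spatial locations, so the result ignores where features occur.
    Returns a normalized vector of length D * D.
    """
    h, w, d = features.shape
    x = features.reshape(h * w, d)          # one row per spatial location
    pooled = x.T @ x / (h * w)              # mean of outer products, (D, D)
    z = pooled.reshape(-1)
    z = np.sign(z) * np.sqrt(np.abs(z))     # signed square root (assumed step)
    return z / (np.linalg.norm(z) + 1e-12)  # L2 normalization (assumed step)
```

Because the outer products are averaged over locations, shuffling the spatial positions of the local features leaves the descriptor unchanged, which is what makes the representation texture-like.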

Concretely, for each category we (i) visualize the "maximal images" by obtaining inputs x that maximize the probability of the particular class according to a texture-based deep network, and (ii) automatically describe the maximal images using a set of texture attributes. The texture captioning models were trained on a dataset of describable textures that we are currently collecting, building on the DTD dataset. The visualizations indicate which aspects of the texture are most discriminative for each category, while the descriptions provide a language-based explanation of the same.
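The idea behind step (i) can be sketched as gradient ascent on the input. The toy below is not the network used in this work: it assumes a stand-in model in which the "input" is a set of local features `X` of shape `(n, D)`, the representation is the mean outer product of those features, and the classifier is a softmax over linear scores with hypothetical weights `W` of shape `(K, D, D)`. In practice the optimization would run over image pixels through a deep network, typically with automatic differentiation and an image prior; the small weight decay here is a crude placeholder for such a prior.

```python
import numpy as np

def class_log_probs(X, W):
    """Log-probabilities of a toy softmax classifier on second-order features."""
    B = X.T @ X / X.shape[0]                             # pooled features, (D, D)
    logits = np.tensordot(W, B, axes=([1, 2], [0, 1]))   # one score per class, (K,)
    logits = logits - logits.max()                       # numerical stability
    return logits - np.log(np.exp(logits).sum())

def maximal_input(W, c, n=64, steps=300, lr=1.0, decay=1e-3, seed=0):
    """Gradient ascent on log p(c | X), starting from small random features."""
    rng = np.random.default_rng(seed)
    d = W.shape[1]
    X = 0.1 * rng.standard_normal((n, d))
    W_sym = W + W.transpose(0, 2, 1)                     # W_k + W_k^T for each class
    for _ in range(steps):
        p = np.exp(class_log_probs(X, W))
        coeff = -p
        coeff[c] += 1.0                                  # d log p_c / d logits = e_c - p
        M = np.tensordot(coeff, W_sym, axes=([0], [0]))  # (D, D)
        grad = X @ M / n                                 # d log p_c / d X
        X = X + lr * (grad - decay * X)                  # ascent step with weight decay
    return X
```

For example, with `W[k, k, k] = 1` for each class `k`, calling `maximal_input(W, c=1)` grows the energy in feature dimension 1 and shrinks the others, so the returned input is one that the toy classifier assigns to class 1.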

People

FGVC6

- Extended Abstract

- Poster

Related Links

- Visualizing and Understanding Deep Texture Representations

- Texture and Natural Language Dataset

References

- Bilinear CNNs for Fine-grained Visual Recognition, T-Y Lin, A. RoyChowdhury, S. Maji, PAMI 2017

- Visualizing and Understanding Deep Texture Representations, T-Y Lin, S. Maji, CVPR 2016

- Describing Textures in the Wild, M. Cimpoi, S. Maji, I. Kokkinos, S. Mohamed, A. Vedaldi, CVPR 2014