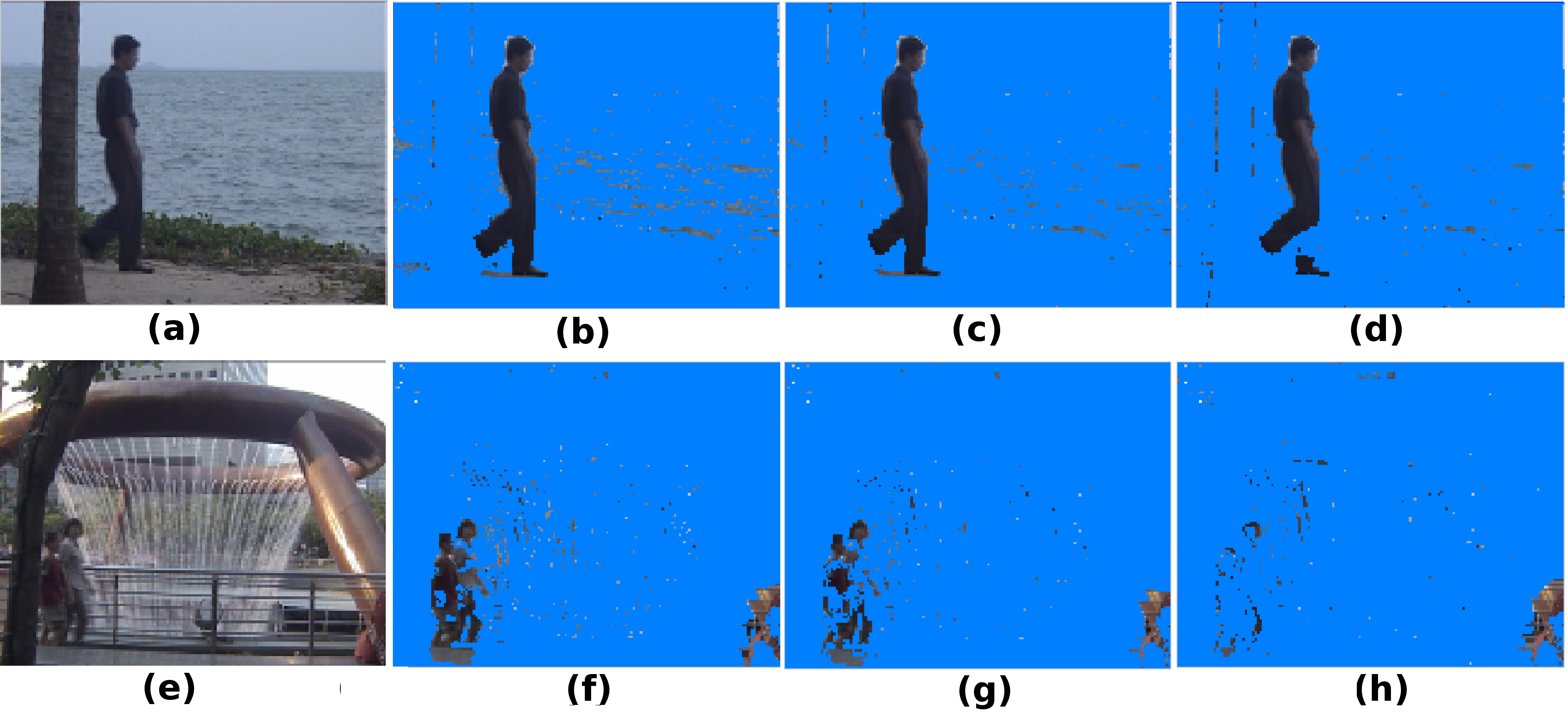

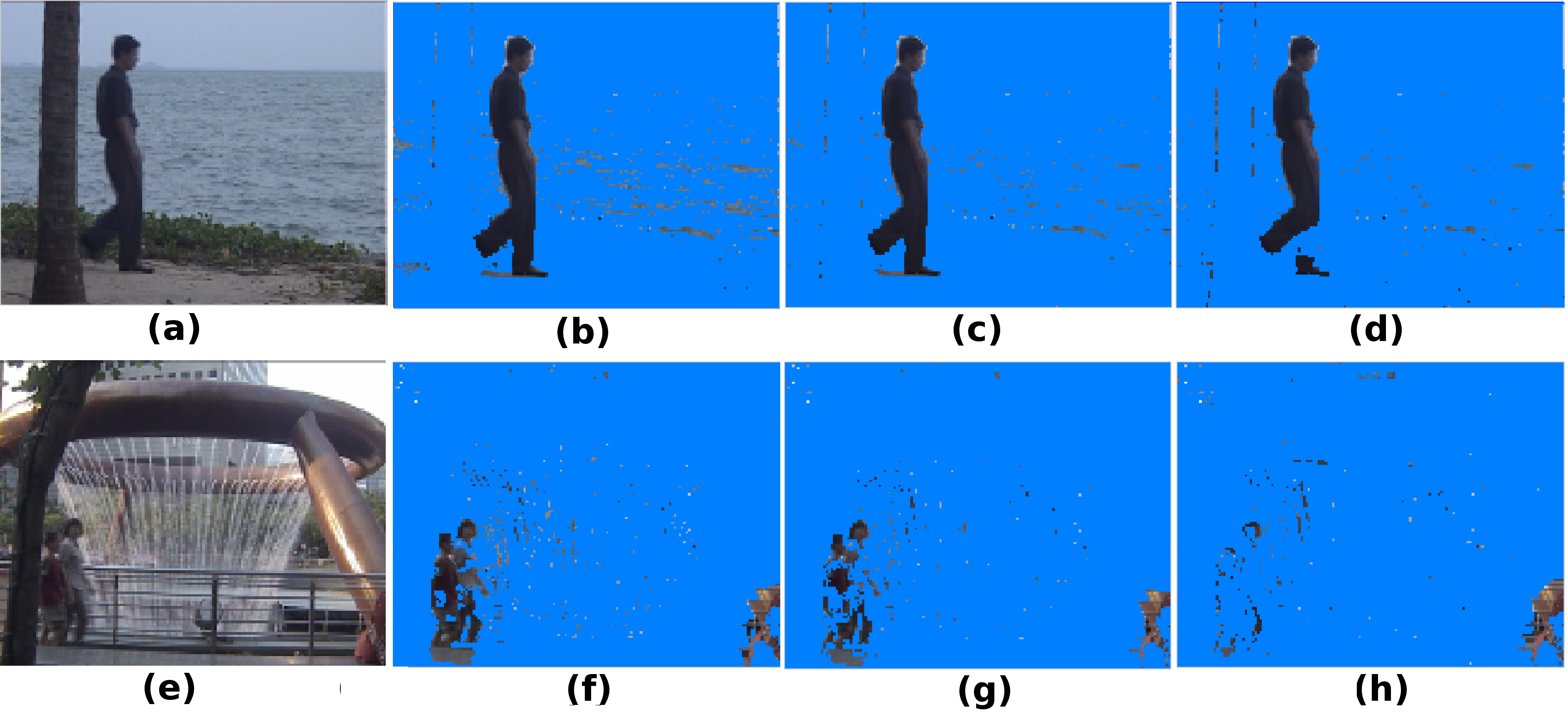

Background Subtraction

Overview

Recent work on background subtraction has shown developments on two major fronts. In one, there has been increasing sophistication of probabilistic models, from mixtures of Gaussians at each pixel, to kernel density estimates at each pixel, and more recently to joint domain-range density estimates that incorporate spatial information. Another line of work has shown the benefits of increasingly complex feature representations, including the use of texture information, local binary patterns, and recently scale-invariant local ternary patterns. In this work, we use joint domain-range based estimates for background and foreground scores and show that dynamically choosing kernel variances in our kernel estimates at each individual pixel can significantly improve results. We give a heuristic method for selectively applying the adaptive kernel calculations which is nearly as accurate as the full procedure but runs much faster. We combine these modeling improvements with recently developed complex features and show significant improvements on a standard backgrounding benchmark.
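The idea of a joint domain-range kernel estimate with a per-pixel choice of kernel variance can be illustrated with a minimal sketch. It is not the authors' implementation. It assumes isotropic Gaussian kernels over pixel position and color, and it assumes that "dynamically choosing" a variance means keeping the candidate that yields the highest score at that pixel. The function names, the sample layout, and the candidate variance values are all hypothetical.

```python
import math


def gaussian(d2, var, dims):
    """Isotropic Gaussian kernel evaluated at squared distance d2."""
    return math.exp(-0.5 * d2 / var) / ((2.0 * math.pi * var) ** (dims / 2.0))


def background_score(pixel_xy, pixel_color, samples, spatial_var, color_var):
    """Joint domain-range kernel density estimate at one pixel.

    samples is a non-empty list of ((x, y), color) background observations
    from earlier frames, taken from a spatial neighbourhood of the pixel
    (an assumed layout, chosen for illustration).
    """
    total = 0.0
    for (sx, sy), s_color in samples:
        # Domain term: squared distance in image coordinates.
        d2_xy = (pixel_xy[0] - sx) ** 2 + (pixel_xy[1] - sy) ** 2
        # Range term: squared distance in color space.
        d2_color = sum((a - b) ** 2 for a, b in zip(pixel_color, s_color))
        total += (gaussian(d2_xy, spatial_var, 2)
                  * gaussian(d2_color, color_var, len(pixel_color)))
    return total / len(samples)


def adaptive_background_score(pixel_xy, pixel_color, samples,
                              spatial_vars, color_vars):
    """Return (score, spatial_var, color_var) for the best candidate pair.

    Assumption: the variance pair is selected independently at each pixel
    by maximizing the kernel estimate over a small candidate set.
    """
    candidates = (
        (background_score(pixel_xy, pixel_color, samples, sv, cv), sv, cv)
        for sv in spatial_vars
        for cv in color_vars
    )
    return max(candidates, key=lambda t: t[0])
```

Evaluating every candidate pair at every pixel multiplies the cost of the plain estimate by the number of candidates, which is the kind of expense a selective heuristic like the one mentioned above would aim to avoid.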

References

- Manjunath Narayana, Allen Hanson, and Erik Learned-Miller. Improvements in Joint Domain-Range Modeling for Background Subtraction. Proceedings of the British Machine Vision Conference (BMVC), 2012.

- Manjunath Narayana, Allen Hanson, and Erik Learned-Miller. Background Modeling using Adaptive Pixelwise Kernel Variances in a Hybrid Feature Space. IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2012.